This post migrates Backstage backend monitoring from kube-prometheus-stack (ServiceMonitor + Prometheus scrape) to an LGTM-style push pipeline:

Backstage (OpenTelemetry SDK) → OTLP/HTTP → Alloy → Prometheus remote_write → Mimir → Grafana

Alloy is used as a push-only OpenTelemetry gateway. Backstage no longer exposes /metrics, and Prometheus Operator no longer scrapes it.

This post is based on

Lab context

- Kubernetes namespaces:
  - Backstage: backstage
  - Observability (LGTM): observability
- Backstage:
- Alloy OTLP/HTTP receiver (in-cluster): http://lgtm-alloy.observability.svc:4318
- Mimir gateway endpoints (in-cluster):
  - remote_write: http://lgtm-mimir-gateway.observability.svc:80/api/v1/push
- Grafana datasource: Mimir (already provisioned in the “Install Grafana …” post)

What you’ll get

- Remove ServiceMonitor and the Backstage /metrics endpoint
- Backstage pushes telemetry via OTLP/HTTP to Alloy
- Alloy forwards metrics to Mimir using Prometheus remote_write
- Metrics become queryable in Grafana (Mimir datasource) using OpenTelemetry labels such as:
  - service_name="homelab-backstage"
  - service_namespace="backstage"

Why push-only (OTLP) instead of ServiceMonitor scrape?

Prometheus scraping works well, but it requires every application to expose a scrape endpoint and ties it to Prometheus Operator configuration. In an LGTM-style stack, it’s common to put a push-based gateway (Alloy) in front, so apps only need OTLP exporters and the routing logic (metrics/logs/traces) lives in one place.

- Switch Backstage metrics exporter from Prometheus to OTLP/HTTP

- Update backend dependencies

From your repo root:

# Add OTLP metrics exporter
yarn --cwd packages/backend add @opentelemetry/exporter-metrics-otlp-http

# Remove Prometheus exporter (no longer needed)
yarn --cwd packages/backend remove @opentelemetry/exporter-prometheus

# Ensure lockfile is updated
yarn install

- Update packages/backend/src/instrumentation.js

Replace the Prometheus exporter with OTLP exporters and a periodic metric reader.

Use the full file below:

const { isMainThread } = require('node:worker_threads');

if (isMainThread) {
  const { NodeSDK } = require('@opentelemetry/sdk-node');
  const { getNodeAutoInstrumentations } = require('@opentelemetry/auto-instrumentations-node');
  const { OTLPTraceExporter } = require('@opentelemetry/exporter-trace-otlp-http');
  const { OTLPMetricExporter } = require('@opentelemetry/exporter-metrics-otlp-http');
  const { PeriodicExportingMetricReader } = require('@opentelemetry/sdk-metrics');

  // OTLP exporters read standard OTEL_* env vars (endpoint, headers, etc.)
  const traceExporter = new OTLPTraceExporter();
  const metricExporter = new OTLPMetricExporter();

  const metricReader = new PeriodicExportingMetricReader({
    exporter: metricExporter,
    exportIntervalMillis: 60000,
  });

  const sdk = new NodeSDK({
    traceExporter,
    metricReader,
    instrumentations: [getNodeAutoInstrumentations()],
  });

  sdk.start();

  process.on('SIGTERM', async () => {
    try {
      await sdk.shutdown();
    } finally {
      process.exit(0);
    }
  });
}
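The exporters in this file are created without arguments, so their targets come from the environment. As a rough illustration of what that means for OTLP/HTTP, the sketch below mimics how a base OTEL_EXPORTER_OTLP_ENDPOINT is expanded into per-signal URLs (/v1/metrics, /v1/traces), as described in the OpenTelemetry exporter specification. resolveOtlpHttpUrl is a hypothetical helper written for this post, not an SDK API, and the real exporters handle more cases than this.

```javascript
// Hypothetical helper (not part of the OpenTelemetry SDK): approximates how an
// OTLP/HTTP exporter derives its target URL from the standard OTEL_* env vars.
function resolveOtlpHttpUrl(signal, env = process.env) {
  // A signal-specific variable (for example OTEL_EXPORTER_OTLP_METRICS_ENDPOINT),
  // when set, is used as-is.
  const specific = env[`OTEL_EXPORTER_OTLP_${signal.toUpperCase()}_ENDPOINT`];
  if (specific) return specific;

  // Otherwise the base endpoint gets the per-signal path appended.
  const base = env.OTEL_EXPORTER_OTLP_ENDPOINT || 'http://localhost:4318';
  return `${base.replace(/\/+$/, '')}/v1/${signal}`;
}

// Endpoint value taken from the lab context in this post.
const env = { OTEL_EXPORTER_OTLP_ENDPOINT: 'http://lgtm-alloy.observability.svc:4318' };
console.log(resolveOtlpHttpUrl('metrics', env));
console.log(resolveOtlpHttpUrl('traces', env));
```

With the lab endpoint, metrics would be posted to http://lgtm-alloy.observability.svc:4318/v1/metrics and traces to the matching /v1/traces path, which is why only the base URL needs to be configured.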

- Update Kubernetes manifests (remove scrape, add OTEL env)

Remove the ServiceMonitor

Delete the file:

kubernetes/backstage-servicemonitor.yaml

And remove it from kubernetes/kustomization.yaml:

resources:
  - homelab-backstage.yaml

Remove the Backstage /metrics port

In kubernetes/homelab-backstage.yaml:

- remove container port 9464
- remove service port 9464

This makes the deployment scrape-free.

Add OTEL env vars to the Backstage container

In the Backstage Deployment (container spec):

env:
  - name: OTEL_SERVICE_NAME
    value: homelab-backstage
  - name: OTEL_RESOURCE_ATTRIBUTES
    value: service.namespace=backstage
  - name: OTEL_EXPORTER_OTLP_ENDPOINT
    value: http://lgtm-alloy.observability.svc:4318

Optional (explicit protocol):

  - name: OTEL_EXPORTER_OTLP_PROTOCOL
    value: http/protobuf
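To make the link between these variables and the labels queried later more concrete, here is a minimal sketch of how OTEL_SERVICE_NAME and OTEL_RESOURCE_ATTRIBUTES could map to Prometheus-style label names. resourceLabelsFromEnv is a hypothetical helper for illustration only. The real conversion happens in the SDK and in Alloy, and whether resource attributes appear as labels on every series depends on how Alloy's Prometheus exporter is configured.

```javascript
// Hypothetical helper: illustrates how the OTEL_* resource settings relate to
// Prometheus-style labels. Not an SDK or Alloy API.
function resourceLabelsFromEnv(env) {
  const attrs = {};

  // OTEL_RESOURCE_ATTRIBUTES is a comma-separated list of key=value pairs.
  for (const pair of (env.OTEL_RESOURCE_ATTRIBUTES || '').split(',')) {
    const idx = pair.indexOf('=');
    if (idx <= 0) continue; // skip empty or malformed entries
    attrs[pair.slice(0, idx).trim()] = pair.slice(idx + 1).trim();
  }

  // OTEL_SERVICE_NAME takes precedence over a service.name entry in the list.
  if (env.OTEL_SERVICE_NAME) attrs['service.name'] = env.OTEL_SERVICE_NAME;

  // Prometheus label names cannot contain dots, so they become underscores.
  const labels = {};
  for (const [key, value] of Object.entries(attrs)) {
    labels[key.replace(/[^a-zA-Z0-9_]/g, '_')] = value;
  }
  return labels;
}

console.log(resourceLabelsFromEnv({
  OTEL_SERVICE_NAME: 'homelab-backstage',
  OTEL_RESOURCE_ATTRIBUTES: 'service.namespace=backstage',
}));
```

For the values used in this post, the result contains service_name set to homelab-backstage and service_namespace set to backstage, which are the label names used in the Grafana queries.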

- Deploy

Build/push your Backstage image as usual (Docker multi-stage build pipeline) and deploy via your normal workflow (kubectl apply / Argo CD sync).

- Verify the pipeline (Backstage → Alloy → Mimir)

Confirm Backstage env vars in the running pod

kubectl -n backstage exec deploy/homelab-backstage -- printenv | grep '^OTEL_'

Confirm Alloy is receiving OTLP metrics

curl -fsS https://alloy.maksonlee.com/metrics | \
  grep -E 'otelcol_receiver_(accepted|refused)_metric_points_total' | head

Expected:

- accepted increases over time

- refused stays at 0
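If you would rather script this check than eyeball the grep output, one possible approach is to sum a counter across all of its series in the /metrics text. The sketch below assumes the standard Prometheus text exposition format. sumCounter is a hypothetical helper and the sample lines are made up for illustration, so the label sets on a real Alloy instance will differ.

```javascript
// Hypothetical helper: sums every series of one metric in Prometheus text
// exposition output (the format returned by Alloy's /metrics endpoint).
function sumCounter(metricsText, name) {
  let total = 0;
  for (const line of metricsText.split('\n')) {
    if (line.startsWith('#')) continue; // HELP/TYPE comment lines
    // Match "name{labels} value" or "name value"; a trailing timestamp is ignored.
    const m = line.match(/^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{.*\})?\s+(\S+)/);
    if (m && m[1] === name) total += Number(m[3]);
  }
  return total;
}

// Made-up sample lines for illustration; real label sets will differ.
const sample = [
  '# TYPE otelcol_receiver_accepted_metric_points_total counter',
  'otelcol_receiver_accepted_metric_points_total{receiver="otlp",transport="http"} 1280',
  '# TYPE otelcol_receiver_refused_metric_points_total counter',
  'otelcol_receiver_refused_metric_points_total{receiver="otlp",transport="http"} 0',
].join('\n');

console.log(sumCounter(sample, 'otelcol_receiver_accepted_metric_points_total'));
console.log(sumCounter(sample, 'otelcol_receiver_refused_metric_points_total'));
```

Running the same function against two fetches taken a minute or so apart gives a simple way to confirm that the accepted total grows while the refused total stays at 0.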

Confirm Alloy is remote-writing to Mimir

curl -fsS https://alloy.maksonlee.com/metrics | \
  grep -E 'prometheus_remote_storage_(samples_total|samples_failed_total|queue_highest_sent_timestamp_seconds|enqueue_retries_total)' | head -n 50

Expected:

- samples_total increases
- samples_failed_total remains 0
- queue timestamps move forward
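These expectations can also be expressed as a small check over two snapshots of the remote_write metrics taken some time apart. This is only a sketch: remoteWriteLooksHealthy and the snapshot field names are hypothetical, and the numbers below are invented for illustration.

```javascript
// Hypothetical check: compares two snapshots of Alloy's remote_write metrics
// against the three expectations in this step.
function remoteWriteLooksHealthy(before, after) {
  return {
    // prometheus_remote_storage_samples_total should keep growing
    samplesIncreasing: after.samplesTotal > before.samplesTotal,
    // prometheus_remote_storage_samples_failed_total should remain 0
    noFailures: after.samplesFailedTotal === 0,
    // prometheus_remote_storage_queue_highest_sent_timestamp_seconds should advance
    timestampAdvancing:
      after.highestSentTimestampSeconds > before.highestSentTimestampSeconds,
  };
}

// Invented snapshot values, one minute apart.
const before = { samplesTotal: 5000, samplesFailedTotal: 0, highestSentTimestampSeconds: 1700000000 };
const after = { samplesTotal: 5600, samplesFailedTotal: 0, highestSentTimestampSeconds: 1700000060 };

console.log(remoteWriteLooksHealthy(before, after));
```

Any false value in the result points at the part of the pipeline to inspect first, for example Alloy's logs when failures are non-zero.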

Confirm metrics exist in Mimir (Grafana Explore)

In Grafana → Explore → datasource Mimir, run:

count({service_name="homelab-backstage"})

If it returns a value, Backstage metrics are stored in Mimir and queryable from Grafana.
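The same query can be sent to Mimir's Prometheus-compatible HTTP API without going through Grafana. The sketch below only builds the request URL. It assumes the query API is served by the same gateway host used for remote_write and that Mimir's default /prometheus prefix is in place, so adjust both for your setup. buildMimirQueryUrl is a hypothetical helper.

```javascript
// Hypothetical helper: builds an instant-query URL for Mimir's
// Prometheus-compatible HTTP API.
// Assumptions: the gateway host matches the remote_write endpoint in this post,
// and the API is exposed under the "/prometheus" prefix.
function buildMimirQueryUrl(promql, base = 'http://lgtm-mimir-gateway.observability.svc:80') {
  const url = new URL('/prometheus/api/v1/query', base);
  url.searchParams.set('query', promql); // handles URL-encoding of the PromQL
  return url;
}

const queryUrl = buildMimirQueryUrl('count({service_name="homelab-backstage"})');
console.log(queryUrl.href);
```

The printed URL can be requested from a pod inside the cluster, for example with curl. If multi-tenancy is enabled in Mimir, the request would also need an X-Scope-OrgID header carrying the tenant ID.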

- Verify metrics in Grafana

At this point, Backstage metrics should be flowing through the full pipeline:

Backstage (OTLP) → Alloy (OTLP receiver) → Alloy Prometheus remote_write → Mimir → Grafana

- Open Grafana → Explore → select the Mimir datasource.

- Run a quick sanity query (any of these works):

count({service_name="homelab-backstage"})

or (pick a known metric):

catalog_entities_count{service_name="homelab-backstage"}

- Open your Backstage dashboard (or build one) and confirm panels populate.

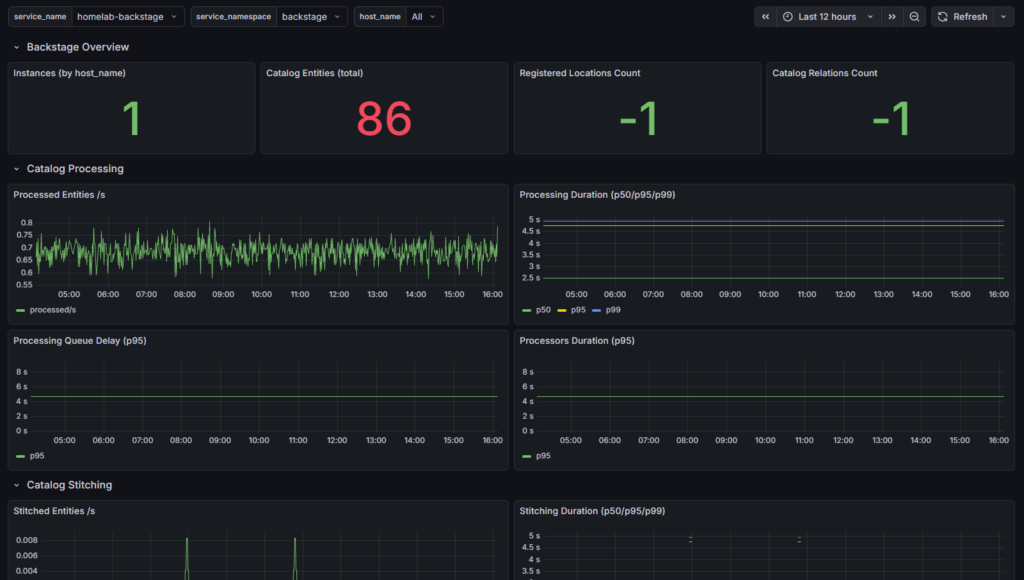

Example (working Backstage metrics in Grafana):
