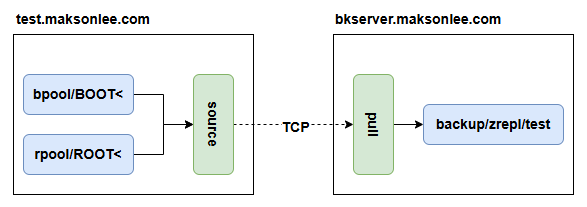

A clean, cron-free way to back up a ZFS-root Ubuntu server: run zrepl on a backup box and pull snapshots over TCP from the source. The source only runs a lightweight zrepl server; restores are simple via zfs clone.

Tested with:

- Source: test.maksonlee.com, Ubuntu 24.04, ZFS root (bpool, rpool), IP 192.168.0.92

- Backup: bkserver.maksonlee.com, Ubuntu 24.04, pool backup, IP 192.168.0.91

Security note: type: tcp is clear-text and authenticates clients by IP mapping. Use only on trusted LANs, or put the link behind WireGuard/OpenVPN/IPsec, or use zrepl’s type: tls transport for mTLS.
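If you go the type: tls route, each host needs a key pair. The following is a minimal sketch, assuming self-signed certificates generated with openssl (1.1.1 or newer, for -addext); the function name gen_zrepl_cert and the output directory are hypothetical, and the 2048-bit key size and 365-day lifetime are only illustrative choices.

```shell
# Sketch: create a self-signed key pair for one host, for use with
# zrepl's tls transport. The certificate's CN is the host name.
# gen_zrepl_cert is a hypothetical helper, not part of zrepl.
gen_zrepl_cert() {
  local name="$1" outdir="$2"
  mkdir -p "$outdir"
  openssl req -x509 -sha256 -nodes \
    -newkey rsa:2048 \
    -days 365 \
    -keyout "$outdir/$name.key" \
    -out "$outdir/$name.crt" \
    -subj "/CN=$name" \
    -addext "subjectAltName = DNS:$name" 2>/dev/null
  # The private key should be readable by its owner only.
  chmod 600 "$outdir/$name.key"
}

# Example (run once per host, then copy each .crt to the other host):
#   gen_zrepl_cert test.maksonlee.com ./certs
#   gen_zrepl_cert bkserver.maksonlee.com ./certs
```

With self-signed certificates, each side would typically list the other host's certificate as ca, its own files as cert and key, and the peer's CN under client_cns (in serve) or server_cn (in connect). Check the zrepl transport documentation for the exact layout before relying on this.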

What you get

- Periodic pull over TCP (the backup connects to the source)

- Replicates periodic snapshots

- Placeholder datasets on the backup so nothing is auto-mounted

- Pruning on both sides (useful recency grid)

- Install zrepl on both hosts (official repo)

(
set -ex
zrepl_apt_key_url=https://zrepl.cschwarz.com/apt/apt-key.asc
zrepl_apt_key_dst=/usr/share/keyrings/zrepl.gpg
zrepl_apt_repo_file=/etc/apt/sources.list.d/zrepl.list

# Install dependencies for subsequent commands
sudo apt update && sudo apt install -y curl gnupg lsb-release

# Deploy the zrepl apt key.
curl -fsSL "$zrepl_apt_key_url" | tee | gpg --dearmor | sudo tee "$zrepl_apt_key_dst" > /dev/null

# Add the zrepl apt repo.
ARCH="$(dpkg --print-architecture)"
CODENAME="$(lsb_release -i -s | tr '[:upper:]' '[:lower:]') $(lsb_release -c -s | tr '[:upper:]' '[:lower:]')"
echo "Using Distro and Codename: $CODENAME"
echo "deb [arch=$ARCH signed-by=$zrepl_apt_key_dst] https://zrepl.cschwarz.com/apt/$CODENAME main" | sudo tee "$zrepl_apt_repo_file" > /dev/null

# Update apt repos & install zrepl.
sudo apt update
sudo apt install -y zrepl
zrepl version
)

- Open the port (source → listens, backup → connects)

On test (192.168.0.92), allow bkserver to reach TCP/8888:

# If using ufw:
sudo ufw allow from 192.168.0.91 to any port 8888 proto tcp

- Source config (test): serve over TCP + take snapshots

/etc/zrepl/zrepl.yml

global:
  logging:
    - type: syslog
      format: human
      level: info

jobs:
  - type: source
    name: source_for_bkserver
    # Source takes snapshots automatically; pull will replicate them.
    snapshotting:
      type: periodic
      prefix: zrepl_
      interval: 5m
    serve:
      type: tcp
      listen: "0.0.0.0:8888"
      clients:
        "192.168.0.91": "bkserver.maksonlee.com"
    filesystems:
      "rpool/ROOT<": true
      "bpool/BOOT<": true

Apply & start:

sudo zrepl configcheck
sudo systemctl enable --now zrepl

# Confirm the listener:
ss -lntp | grep 8888 || sudo journalctl -u zrepl -n 100 --no-pager

- Create the backup pool & placeholders (bkserver)

These commands wipe your selected disk. Use your own by-path symlink.
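Before running them, it may be worth confirming that the target disk really is unused. The sketch below is one way to do that, assuming lsblk's raw output leaves empty columns blank; disk_looks_blank is a hypothetical helper that only inspects what lsblk reports and is no substitute for reading the lsblk output yourself.

```shell
# Sketch: decide from lsblk output whether a disk looks unused.
# Expects the output of `lsblk -rno NAME,FSTYPE,MOUNTPOINT <disk>` on stdin.
# Succeeds only if there is exactly one line (no partitions) and that line
# has a name but no filesystem type and no mountpoint.
disk_looks_blank() {
  awk 'NF > 1 { bad = 1 } END { exit (NR == 1 && !bad) ? 0 : 1 }'
}

# Example (substitute your own by-path symlink):
#   lsblk -rno NAME,FSTYPE,MOUNTPOINT /dev/disk/by-path/<your-sdb-by-path-symlink> \
#     | disk_looks_blank && echo "disk looks unused" || echo "disk is in use, stop"
```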

# On bkserver

# Inspect disks
lsblk

# Use a stable device path for sdb (adjust the grep target to your disk)
ls -l /dev/disk/by-path/ | grep 'sdb$'

# Create pool (WILL WIPE THE DISK)
sudo zpool create -o ashift=12 -o autotrim=on \
  -O atime=off -O compression=zstd -O mountpoint=none \
  backup /dev/disk/by-path/<your-sdb-by-path-symlink>

# Optional: cold-store tuning to save ARC
sudo zfs set primarycache=metadata backup

# Keep pool root clean (not auto-mounted)
sudo zfs set canmount=off mountpoint=none backup

# Browsing root (optional)
sudo zfs create -o mountpoint=/backup backup/zrepl

# One container per source host; leaves are created by receive
sudo zfs create -o canmount=off -o mountpoint=none backup/zrepl/test

# Verify (-r also lists the child datasets created above)
zpool status
zpool list
zfs list -r -o name,mountpoint,canmount backup

- Pull job on backup (bkserver): connect over TCP

/etc/zrepl/zrepl.yml on bkserver:

global:
  logging:
    - type: syslog
      format: human
      level: info

jobs:
  - type: pull
    name: pull_from_test
    connect:
      type: tcp
      address: "192.168.0.92:8888"
    # Where to receive the datasets from 'test'
    root_fs: "backup/zrepl/test"
    # Create placeholders with explicit encryption policy:
    # Option A (default in this post): unencrypted placeholders
    recv:
      placeholder:
        encryption: off
    interval: 15m
    pruning:
      keep_sender:
        - type: last_n
          count: 28
      keep_receiver:
        - type: grid
          grid: "24x1h | 7x1d | 4x1w | 12x30d"
          regex: "^zrepl_.*"
    replication:
      protection:
        initial: guarantee_resumability
        incremental: guarantee_resumability

Apply & trigger first run:

sudo zrepl configcheck
sudo systemctl enable --now zrepl
sudo zrepl signal wakeup pull_from_test

- Verify replication

# Watch logs
journalctl -u zrepl -n 200 --no-pager

# Confirm received snapshots
zfs list -t snapshot -r backup/zrepl/test | head -n 40

You should see new zrepl_... snapshots arrive every 15 minutes: the source takes one every 5 minutes, and each pull replicates those created since the previous run. Pruning runs after replication.
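The manual check can also be turned into a small freshness test. This is a sketch, not part of zrepl: newest_snapshot_age is a hypothetical helper that reads `zfs list -Hp` output (tab-separated, creation time as epoch seconds) and reports how old the newest zrepl_ snapshot is. The 1800-second threshold in the usage comment is an assumption (two pull intervals), so adjust it to taste.

```shell
# Sketch: print the age in seconds of the newest zrepl_ snapshot.
# stdin: output of `zfs list -Hp -t snapshot -o name,creation -r <root_fs>`
# $1:    current time as epoch seconds (e.g. "$(date +%s)")
# Fails (non-zero exit, no output) if no zrepl_ snapshot is present.
newest_snapshot_age() {
  local now="$1"
  awk -F '\t' -v now="$now" '
    $1 ~ /@zrepl_/ && $2 > newest { newest = $2 }
    END {
      if (newest == 0) exit 1
      print now - newest
    }'
}

# Example on bkserver:
#   age=$(zfs list -Hp -t snapshot -o name,creation -r backup/zrepl/test \
#           | newest_snapshot_age "$(date +%s)") || echo "no zrepl snapshots found" >&2
#   [ "${age:-999999}" -le 1800 ] || echo "replication looks stale" >&2
```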

- Restore (fast) via zfs clone

# 7.1 Find a snapshot on the receiver
zfs list -t snapshot -r backup/zrepl/test | head

# 7.2 Clone a snapshot under backup/zrepl/*
# (replace the placeholders; the quotes keep the shell from treating < and > as redirections)
SNAP="backup/zrepl/test/rpool/ROOT/<your_dataset>@<your_snapshot>"
sudo zfs clone "$SNAP" backup/zrepl/restore-test

# 7.3 Verify it mounted under /backup
zfs list -o name,mountpoint,mounted backup/zrepl/restore-test
ls -la /backup/restore-test | head

# 7.4 Cleanup when done
sudo zfs destroy backup/zrepl/restore-test

- Add more machines

For each additional source host:

- On the source, add the backup server's IP to the serve.clients map with a unique identity.

- On bkserver, create backup/zrepl/<host> (canmount=off, mountpoint=none) and add another pull job with root_fs: backup/zrepl/<host> and connect.address: "<source-ip>:8888".

- Keep the same pruning grid or adjust per host.
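To avoid copy-paste mistakes when adding hosts, the extra pull job can be generated from a template. A minimal sketch, assuming the new host reuses port 8888 and the pruning rules from this post unchanged; print_pull_job, the host name web01 and the address 192.168.0.93 are hypothetical.

```shell
# Sketch: print a pull-job stanza for one additional source host.
# $1: short host name (used in the job name and in root_fs)
# $2: IP address of the source host
print_pull_job() {
  local host="$1" ip="$2"
  cat <<EOF
  - type: pull
    name: pull_from_${host}
    connect:
      type: tcp
      address: "${ip}:8888"
    root_fs: "backup/zrepl/${host}"
    recv:
      placeholder:
        encryption: off
    interval: 15m
    pruning:
      keep_sender:
        - type: last_n
          count: 28
      keep_receiver:
        - type: grid
          grid: "24x1h | 7x1d | 4x1w | 12x30d"
          regex: "^zrepl_.*"
    replication:
      protection:
        initial: guarantee_resumability
        incremental: guarantee_resumability
EOF
}

# Example on bkserver (hypothetical host and IP):
#   sudo zfs create -o canmount=off -o mountpoint=none backup/zrepl/web01
#   print_pull_job web01 192.168.0.93
```

Paste the output under jobs: in /etc/zrepl/zrepl.yml on bkserver, then run sudo zrepl configcheck before restarting the service.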
