Introduction

In the previous post, How to Configure Ceph RGW Multisite with HAProxy and Let’s Encrypt on Ubuntu 24.04, we set up a Ceph RGW multisite deployment with TLS encryption and HAProxy-based routing.

This follow-up covers how to verify that object versioning and replication work as expected across zones by:

- Creating a versioned bucket

- Uploading object versions from different zones

- Verifying version history and default version selection

Prerequisites

- A fully configured Ceph RGW multisite cluster

- Two zones accessible at:

https://ceph-zone1.maksonlee.com

https://ceph-zone2.maksonlee.com

- Valid TLS certificates via HAProxy + Let’s Encrypt

- awscli and jq installed

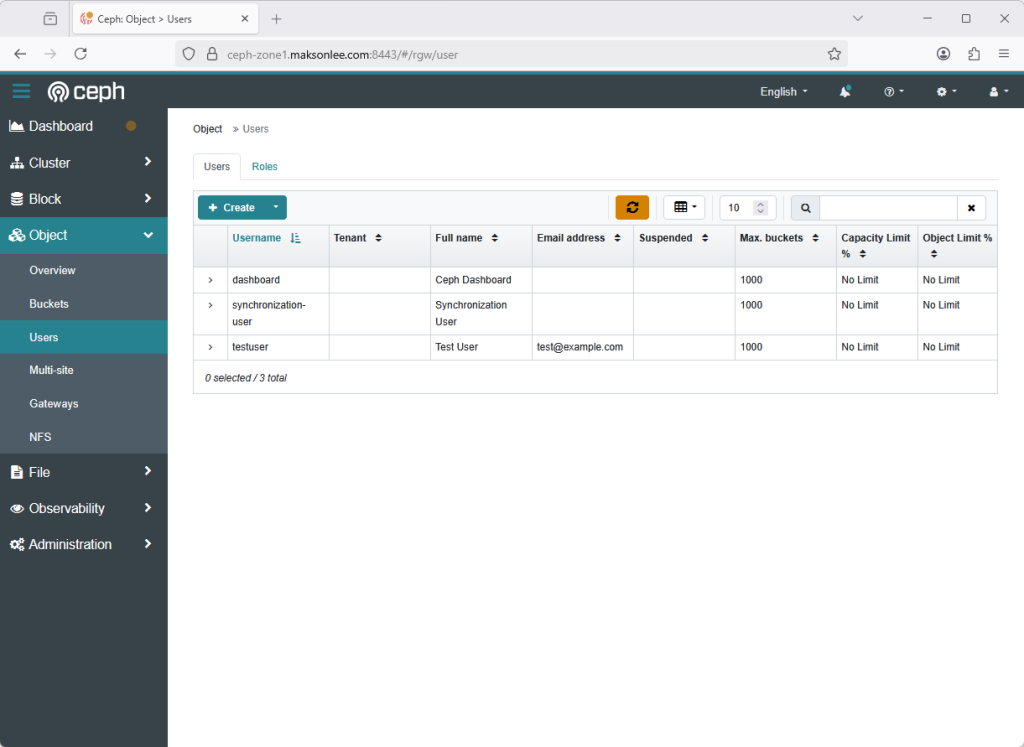

- Create a Test User (on Zone1)

sudo docker exec -it ceph-mon-zone1 radosgw-admin user create \
  --uid="testuser" \
  --display-name="Test User" \
  --email="test@example.com" \
  --access-key="TESTACCESSKEY123456" \
  --secret="TESTSECRETKEY1234567890"

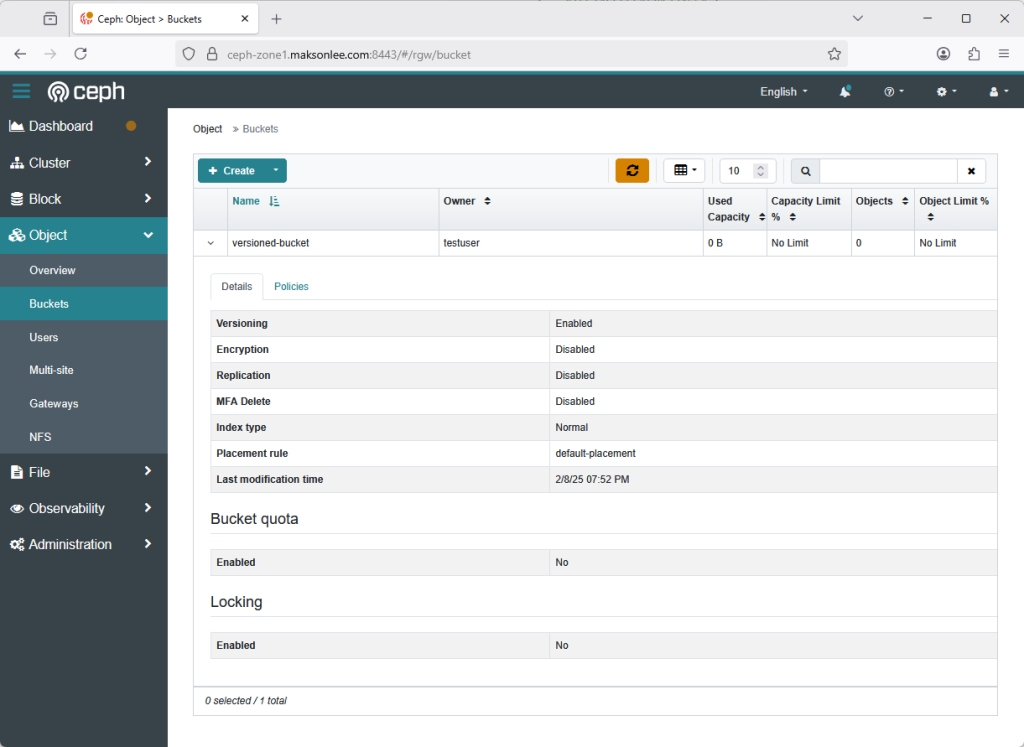
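Before moving on, it can be worth confirming that the new user's keys are accepted by both zones, since user metadata has to replicate too. The sketch below is one way to do that once the credentials from the next step are exported. The function names are hypothetical, and it assumes RGW reports the user's uid as the owner ID in the list-buckets response, as it does in the listings later in this post.

```shell
# Print the owner ID that an endpoint associates with the exported credentials.
# Relies on AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY being set.
credential_owner() {
  local endpoint="$1"
  aws --endpoint-url "$endpoint" s3api list-buckets \
    --query 'Owner.ID' --output text
}

# Succeed only if every listed endpoint resolves the keys to the expected uid.
# Arguments: expected uid, then one or more endpoint URLs.
user_known_everywhere() {
  local expected="$1" endpoint owner
  shift
  for endpoint in "$@"; do
    # A failed request (for example, keys not yet replicated) counts as a miss.
    owner="$(credential_owner "$endpoint")" || owner=""
    if [ "$owner" != "$expected" ]; then
      echo "$endpoint does not recognise $expected yet" >&2
      return 1
    fi
  done
}

# Example (not run here):
#   user_known_everywhere testuser \
#     https://ceph-zone1.maksonlee.com https://ceph-zone2.maksonlee.com
```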

- Create a Versioned Bucket

Set up your environment:

export AWS_ACCESS_KEY_ID=TESTACCESSKEY123456

export AWS_SECRET_ACCESS_KEY=TESTSECRETKEY1234567890

export AWS_ENDPOINT=https://ceph-zone1.maksonlee.com

Then:

aws --endpoint-url $AWS_ENDPOINT s3api create-bucket --bucket versioned-bucket

aws --endpoint-url $AWS_ENDPOINT s3api put-bucket-versioning \
  --bucket versioned-bucket \
  --versioning-configuration Status=Enabled

Wait a few seconds for replication to reach zone2.
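Instead of guessing how long to wait, you can ask each zone what versioning status it reports for the bucket. This is a minimal sketch with hypothetical function names. It assumes the AWS CLI prints None in text mode when the response has no Status field, which is what an unversioned bucket typically returns.

```shell
# Print the versioning status an endpoint reports for a bucket:
# "Enabled", "Suspended", or (assumed) "None" if versioning was never set.
versioning_status() {
  local endpoint="$1" bucket="$2"
  aws --endpoint-url "$endpoint" s3api get-bucket-versioning \
    --bucket "$bucket" --query 'Status' --output text
}

# Succeed only when every listed endpoint reports "Enabled".
# Arguments: bucket name, then one or more endpoint URLs.
versioning_enabled_everywhere() {
  local bucket="$1" endpoint status
  shift
  for endpoint in "$@"; do
    status="$(versioning_status "$endpoint" "$bucket")" || return 1
    if [ "$status" != "Enabled" ]; then
      echo "versioning on $endpoint: $status" >&2
      return 1
    fi
  done
}

# Example (not run here):
#   versioning_enabled_everywhere versioned-bucket \
#     https://ceph-zone1.maksonlee.com https://ceph-zone2.maksonlee.com
```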

- Upload Version 1 from Zone1

echo "version1 from zone1" > file.txt

aws --endpoint-url $AWS_ENDPOINT s3 cp file.txt s3://versioned-bucket/file.txt

- Upload Version 2 from Zone2

Switch to zone2:

export AWS_ENDPOINT=https://ceph-zone2.maksonlee.com

echo "version2 from zone2" > file.txt

aws --endpoint-url $AWS_ENDPOINT s3 cp file.txt s3://versioned-bucket/file.txt

- List Object Versions in Both Zones

export AWS_ENDPOINT=https://ceph-zone1.maksonlee.com

aws --endpoint-url $AWS_ENDPOINT s3api list-object-versions \
  --bucket versioned-bucket --prefix file.txt

{

"Versions": [

{

"ETag": "\"2de7d20a70570640084e8f757369d289\"",

"Size": 20,

"StorageClass": "STANDARD",

"Key": "file.txt",

"VersionId": "faEJxMq.a2XKRSA5-zRL11-1sZwe1gk",

"IsLatest": true,

"LastModified": "2025-08-02T12:00:34.723000+00:00",

"Owner": {

"DisplayName": "Test User",

"ID": "testuser"

}

},

{

"ETag": "\"adca38cf7cd7a761be684245f1292a36\"",

"Size": 20,

"StorageClass": "STANDARD",

"Key": "file.txt",

"VersionId": "QCePdU8t4MyGjC-cuUSa7ZjNHgL8nbz",

"IsLatest": false,

"LastModified": "2025-08-02T11:54:20.625000+00:00",

"Owner": {

"DisplayName": "Test User",

"ID": "testuser"

}

}

],

"RequestCharged": null,

"Prefix": "file.txt"

}

export AWS_ENDPOINT=https://ceph-zone2.maksonlee.com

aws --endpoint-url $AWS_ENDPOINT s3api list-object-versions \
  --bucket versioned-bucket --prefix file.txt

{

"Versions": [

{

"ETag": "\"2de7d20a70570640084e8f757369d289\"",

"Size": 20,

"StorageClass": "STANDARD",

"Key": "file.txt",

"VersionId": "faEJxMq.a2XKRSA5-zRL11-1sZwe1gk",

"IsLatest": true,

"LastModified": "2025-08-02T12:00:34.723000+00:00",

"Owner": {

"DisplayName": "Test User",

"ID": "testuser"

}

},

{

"ETag": "\"adca38cf7cd7a761be684245f1292a36\"",

"Size": 20,

"StorageClass": "STANDARD",

"Key": "file.txt",

"VersionId": "QCePdU8t4MyGjC-cuUSa7ZjNHgL8nbz",

"IsLatest": false,

"LastModified": "2025-08-02T11:54:20.625000+00:00",

"Owner": {

"DisplayName": "Test User",

"ID": "testuser"

}

}

],

"RequestCharged": null,

"Prefix": "file.txt"

}

- What is the Default Version?

In each zone, Ceph RGW treats the last written or replicated version as the default: the one returned when you call get-object without a version ID.

| Zone | Default version immediately after upload |

|---|---|

| Zone1 | v1 (until v2 syncs in) |

| Zone2 | v2 (written locally) |

Eventually, both zones will return v2.
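To confirm that both zones have converged without downloading the object, you can compare the version ID each zone flags as IsLatest. The sketch below uses hypothetical function names. Because --prefix matches by prefix, it assumes no other key in the bucket starts with the same string.

```shell
# Print the VersionId that an endpoint currently flags as IsLatest for a key.
# Prints "None" (AWS CLI text output for null) if no version is listed.
latest_version_id() {
  local endpoint="$1" bucket="$2" key="$3"
  aws --endpoint-url "$endpoint" s3api list-object-versions \
    --bucket "$bucket" --prefix "$key" \
    --query 'Versions[?IsLatest].VersionId | [0]' --output text
}

# Compare the latest version of a key across two endpoints.
# Returns 0 if they match, 1 if they differ, 2 if a request failed.
zones_agree_on_latest() {
  local bucket="$1" key="$2" endpoint_a="$3" endpoint_b="$4" id_a id_b
  id_a="$(latest_version_id "$endpoint_a" "$bucket" "$key")" || return 2
  id_b="$(latest_version_id "$endpoint_b" "$bucket" "$key")" || return 2
  if [ "$id_a" = "$id_b" ] && [ "$id_a" != "None" ]; then
    echo "in sync: $id_a"
  else
    echo "differs: $endpoint_a=$id_a $endpoint_b=$id_b"
    return 1
  fi
}

# Example (not run here):
#   zones_agree_on_latest versioned-bucket file.txt \
#     https://ceph-zone1.maksonlee.com https://ceph-zone2.maksonlee.com
```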

Check It:

export AWS_ENDPOINT=https://ceph-zone1.maksonlee.com

aws --endpoint-url $AWS_ENDPOINT s3 cp s3://versioned-bucket/file.txt file_zone1.txt

download: s3://versioned-bucket/file.txt to ./file_zone1.txt

cat file_zone1.txt

version2 from zone2

export AWS_ENDPOINT=https://ceph-zone2.maksonlee.com

aws --endpoint-url $AWS_ENDPOINT s3 cp s3://versioned-bucket/file.txt file_zone2.txt

download: s3://versioned-bucket/file.txt to ./file_zone2.txt

cat file_zone2.txt

version2 from zone2

diff file_zone1.txt file_zone2.txt

- Metadata Waits for Data Sync

To see how Ceph delays metadata visibility until data fully syncs:

- Upload a large file to Zone1:

export AWS_ENDPOINT=https://ceph-zone1.maksonlee.com

aws --endpoint-url $AWS_ENDPOINT s3 cp ubuntu-24.04.2-live-server-amd64.iso s3://versioned-bucket/ubuntu-24.04.2-live-server-amd64.iso

Wait for the command to complete; this ensures the object is fully written to Zone1.

- Immediately after upload completes, check Zone2:

export AWS_ENDPOINT=https://ceph-zone2.maksonlee.com

aws --endpoint-url $AWS_ENDPOINT s3api list-object-versions \
  --bucket versioned-bucket --prefix ubuntu-24.04.2-live-server-amd64.iso

You’ll likely get:

{

"RequestCharged": null,

"Prefix": "ubuntu-24.04.2-live-server-amd64.iso"

}

The metadata hasn’t synced yet because the object data hasn’t finished replicating.

- Wait and try again:

Depending on your file size, WAN throughput, and RGW load, replication may take several minutes or even hours.
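Since the wait is open-ended, it may be more convenient to script the check than to rerun it by hand. The following is a minimal polling sketch, not a definitive implementation: the function name, default timeout, and default interval are assumptions, and the elapsed time it reports counts only the time spent sleeping.

```shell
# Poll an endpoint until at least one version of a key is listed, or give up.
# Arguments: endpoint, bucket, key, timeout in seconds (default 3600),
# polling interval in seconds (default 30).
wait_for_object() {
  local endpoint="$1" bucket="$2" key="$3"
  local timeout="${4:-3600}" interval="${5:-30}"
  local waited=0 version_id
  while :; do
    # With --output text, a missing Versions list is printed as "None".
    version_id="$(aws --endpoint-url "$endpoint" s3api list-object-versions \
      --bucket "$bucket" --prefix "$key" \
      --query 'Versions[0].VersionId' --output text)" || return 2
    if [ -n "$version_id" ] && [ "$version_id" != "None" ]; then
      echo "visible after ${waited}s"
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "still not visible after ${waited}s" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}

# Example (not run here): check zone2 every 60 seconds for up to two hours.
#   wait_for_object https://ceph-zone2.maksonlee.com versioned-bucket \
#     ubuntu-24.04.2-live-server-amd64.iso 7200 60
```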

Periodically check:

aws --endpoint-url $AWS_ENDPOINT s3api list-object-versions \
  --bucket versioned-bucket --prefix ubuntu-24.04.2-live-server-amd64.iso

Once replication completes, version info will appear in Zone2:

{

"Versions": [

{

"ETag": "\"8ada8296a8c06bd07dd627027b3a7629-384\"",

"Size": 3213064192,

"StorageClass": "STANDARD",

"Key": "ubuntu-24.04.2-live-server-amd64.iso",

"VersionId": "xZT7ZypGzdvnQ1stzPCCVc-3Z1UZ4jQ",

"IsLatest": true,

"LastModified": "2025-08-03T09:11:32.293000+00:00",

"Owner": {

"DisplayName": "Test User",

"ID": "testuser"

}

}

],

"RequestCharged": null,

"Prefix": "ubuntu-24.04.2-live-server-amd64.iso"

}

Conclusion

This test confirms:

- Object versioning is replicated across zones

- You can write from either zone and see updates in both

- The “default” version is zone-local and determined by write/sync timing

- Metadata is only made visible in the destination zone after object data is fully synced

By validating this behavior, you can confidently rely on Ceph RGW multisite for geo-redundant S3 workloads and understand its eventual consistency guarantees.
