Introduction

In my previous post I showed how to run Kafka 4.0 + HAProxy on a single Ubuntu 24.04 node with TLS termination in HAProxy while Kafka itself stays in PLAINTEXT.

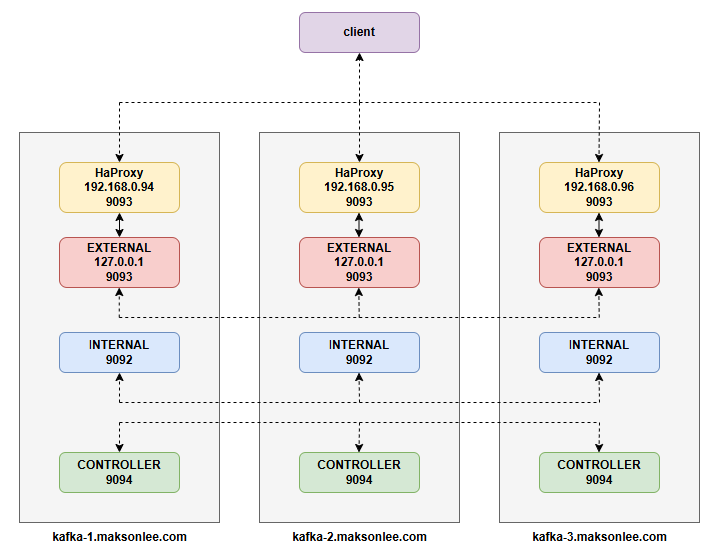

This post scales that idea to a 3-node Kafka 4.1 cluster:

- 3 Kafka nodes, all running in KRaft mode (no ZooKeeper)

- Each node has:
  - an `INTERNAL` listener on 9092 for broker-to-broker traffic
  - an `EXTERNAL` listener on 127.0.0.1:9093 for the local HAProxy
  - a `CONTROLLER` listener on 9094 for the KRaft quorum

- Each node runs HAProxy, which:
  - listens on `<node-ip>:9093` with TLS
  - proxies to Kafka's PLAINTEXT `EXTERNAL` listener at `127.0.0.1:9093`

- Clients connect with SSL to all three nodes (no extra load balancer).

Kafka itself never sees TLS – it only talks PLAINTEXT – but all client traffic is encrypted on the wire.

Target Architecture

I use three Ubuntu 24.04 servers:

| Node | Hostname | IP |
|---|---|---|
| 1 | kafka-1.maksonlee.com | 192.168.0.94 |
| 2 | kafka-2.maksonlee.com | 192.168.0.95 |
| 3 | kafka-3.maksonlee.com | 192.168.0.96 |

Each node looks like this:

- HAProxy: 192.168.0.X:9093 (TLS, client-facing)
- Kafka EXTERNAL: 127.0.0.1:9093 (PLAINTEXT, for HAProxy only)
- Kafka INTERNAL: :9092 on all interfaces (PLAINTEXT, broker-to-broker)
- Kafka CONTROLLER: :9094 on all interfaces (PLAINTEXT, KRaft)
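HAProxy and Kafka's EXTERNAL listener both use port 9093; they coexist only because they bind different addresses (the node IP vs. loopback). The sketch below encodes the layout above and flags pairs of sockets that would collide, using a simplified model of the usual rule: same port and either the same address or a wildcard on either side. `Bind`, `node_binds`, and `conflicts` are hypothetical names for illustration, not part of Kafka or HAProxy.

```python
from typing import NamedTuple

# "", "0.0.0.0" and "::" stand for "all interfaces" in this simplified model.
WILDCARDS = {"", "0.0.0.0", "::"}


class Bind(NamedTuple):
    owner: str  # process/listener that opens the socket
    host: str   # bind address; a wildcard means all interfaces
    port: int


def node_binds(ip: str) -> list[Bind]:
    """Listening sockets on one node, following the layout above."""
    return [
        Bind("haproxy", ip, 9093),                  # TLS, client-facing
        Bind("kafka EXTERNAL", "127.0.0.1", 9093),  # PLAINTEXT, HAProxy only
        Bind("kafka INTERNAL", "", 9092),           # INTERNAL://:9092
        Bind("kafka CONTROLLER", "", 9094),         # CONTROLLER://:9094
    ]


def conflicts(binds: list[Bind]) -> list[tuple[str, str]]:
    """Pairs of owners that would collide: same port and same or wildcard address."""
    found = []
    for i, a in enumerate(binds):
        for b in binds[i + 1:]:
            if a.port != b.port:
                continue
            if a.host == b.host or a.host in WILDCARDS or b.host in WILDCARDS:
                found.append((a.owner, b.owner))
    return found
```

`conflicts(node_binds("192.168.0.94"))` returns an empty list. Binding HAProxy to `0.0.0.0:9093` instead would be reported as colliding with the EXTERNAL listener, which is why the frontend binds the node IP explicitly.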

Prerequisites

- Three fresh Ubuntu 24.04 servers
- DNS A records:
  - kafka-1.maksonlee.com → 192.168.0.94
  - kafka-2.maksonlee.com → 192.168.0.95
  - kafka-3.maksonlee.com → 192.168.0.96
- A Cloudflare API token (for DNS-01 validation via Certbot)
- sudo access on each node

All commands are run as a sudo-capable user (e.g. ubuntu).
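Before requesting certificates, it can help to confirm that each hostname resolves to the IP in the table, since both the certificates and the advertised EXTERNAL listeners depend on those names. A minimal sketch, assuming the standard-library resolver (`socket.gethostbyname`) is what your clients use; `check_dns` is a hypothetical helper, and the resolver is injectable so it can be exercised without network access.

```python
import socket
from typing import Callable

# Expected A records from the node table above.
EXPECTED = {
    "kafka-1.maksonlee.com": "192.168.0.94",
    "kafka-2.maksonlee.com": "192.168.0.95",
    "kafka-3.maksonlee.com": "192.168.0.96",
}


def check_dns(expected: dict[str, str],
              resolve: Callable[[str], str] = socket.gethostbyname) -> dict[str, str]:
    """Return {hostname: problem} for every name that does not resolve as expected."""
    problems = {}
    for host, want in expected.items():
        try:
            got = resolve(host)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            problems[host] = f"lookup failed: {exc}"
            continue
        if got != want:
            problems[host] = f"resolved to {got}, expected {want}"
    return problems
```

Running `check_dns(EXPECTED)` on a node or client should return an empty dict once the records are in place.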

- Install Java & Kafka on All Nodes

Run these on each of kafka-1, kafka-2, and kafka-3:

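```bash
sudo apt update
sudo apt install -y openjdk-21-jdk

cd /opt
# If 4.1.0 is no longer on dlcdn, the same file is under https://archive.apache.org/dist/kafka/4.1.0/
sudo curl -LO https://dlcdn.apache.org/kafka/4.1.0/kafka_2.13-4.1.0.tgz
sudo tar -xzf kafka_2.13-4.1.0.tgz
sudo mv kafka_2.13-4.1.0 kafka

# Data directory referenced by log.dirs below
sudo mkdir -p /var/lib/kafka/kraft-combined-logs
```

- Kafka Cluster Configuration (KRaft, 3 Nodes)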

We’ll configure Kafka so that:

- `process.roles=broker,controller` (combined role)
- `INTERNAL`, `EXTERNAL`, and `CONTROLLER` listeners are used
- the KRaft quorum uses three controllers on port 9094
- internal topics (`__consumer_offsets`, `__transaction_state`) have replication factor 3

- Common settings

On each node, edit:

```bash
sudo vi /opt/kafka/config/server.properties
```

Keep the comments if you like, but make sure these properties exist and match:

```properties
############################# Server Basics #############################

# KRaft combined broker + controller
process.roles=broker,controller

# List of controller endpoints used to bootstrap the KRaft quorum
controller.quorum.bootstrap.servers=192.168.0.94:9094,192.168.0.95:9094,192.168.0.96:9094

############################# Socket Server Settings #############################

# Listeners
listeners=INTERNAL://:9092,EXTERNAL://127.0.0.1:9093,CONTROLLER://:9094

# Inter-broker listener
inter.broker.listener.name=INTERNAL

# Controller listener (required for KRaft)
controller.listener.names=CONTROLLER

# Listener → security protocol mapping (all PLAINTEXT)
listener.security.protocol.map=INTERNAL:PLAINTEXT,EXTERNAL:PLAINTEXT,CONTROLLER:PLAINTEXT

############################# Log Basics #############################

log.dirs=/var/lib/kafka/kraft-combined-logs

# Default partitions per topic
num.partitions=3

############################# Internal Topic Settings #############################

# Replication factors for important internal topics (3-node cluster)
offsets.topic.replication.factor=3
transaction.state.log.replication.factor=3
transaction.state.log.min.isr=2

############################# KRaft Quorum #############################

controller.quorum.voters=1@192.168.0.94:9094,2@192.168.0.95:9094,3@192.168.0.96:9094
```

- Node-specific settings

Now set node.id and advertised.listeners per node.

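kafka-1 (192.168.0.94)

```properties
node.id=1
advertised.listeners=INTERNAL://192.168.0.94:9092,EXTERNAL://kafka-1.maksonlee.com:9093
```

kafka-2 (192.168.0.95)

```properties
node.id=2
advertised.listeners=INTERNAL://192.168.0.95:9092,EXTERNAL://kafka-2.maksonlee.com:9093
```

kafka-3 (192.168.0.96)

```properties
node.id=3
advertised.listeners=INTERNAL://192.168.0.96:9092,EXTERNAL://kafka-3.maksonlee.com:9093
```

The EXTERNAL address is each node's own hostname because that is the name on its certificate: clients connect to whatever a broker advertises, and that connection lands on HAProxy at `<node-ip>:9093`.

Everything else can stay as in the default config/server.properties template.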
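The three per-node blocks differ only in `node.id`, the IP, and the hostname, while the quorum lines are derived from the same table. A small templating sketch, assuming the node table from "Target Architecture" and the port plan above; `node_properties` is a hypothetical helper whose output you would merge into each node's `server.properties` by hand.

```python
# Node table from "Target Architecture": node.id -> (hostname, IP)
NODES = {
    1: ("kafka-1.maksonlee.com", "192.168.0.94"),
    2: ("kafka-2.maksonlee.com", "192.168.0.95"),
    3: ("kafka-3.maksonlee.com", "192.168.0.96"),
}
INTERNAL_PORT = 9092
EXTERNAL_PORT = 9093  # the port clients reach via HAProxy
CONTROLLER_PORT = 9094


def node_properties(node_id: int, nodes: dict[int, tuple[str, str]] = NODES) -> str:
    """Render the quorum and node-specific server.properties lines for one node."""
    hostname, ip = nodes[node_id]
    ids = sorted(nodes)
    voters = ",".join(f"{i}@{nodes[i][1]}:{CONTROLLER_PORT}" for i in ids)
    bootstrap = ",".join(f"{nodes[i][1]}:{CONTROLLER_PORT}" for i in ids)
    return "\n".join([
        f"controller.quorum.bootstrap.servers={bootstrap}",
        f"controller.quorum.voters={voters}",
        f"node.id={node_id}",
        # INTERNAL by IP for brokers; EXTERNAL by the hostname on the TLS certificate
        f"advertised.listeners=INTERNAL://{ip}:{INTERNAL_PORT},"
        f"EXTERNAL://{hostname}:{EXTERNAL_PORT}",
    ]) + "\n"
```

For example, `print(node_properties(2))` prints the four lines for kafka-2. Generating them from one table makes it harder for the voter list to drift out of sync between nodes.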

- Create a Single Cluster ID and Format Storage

You must use one shared cluster ID for all 3 nodes.

On one node (e.g. kafka-1):

```bash
cd /opt/kafka
KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"
echo "$KAFKA_CLUSTER_ID"
```

Copy the printed UUID string. Then on each node, run (replace YOUR_CLUSTER_ID_HERE with your actual ID):

```bash
cd /opt/kafka
sudo bin/kafka-storage.sh format \
  --config config/server.properties \
  --cluster-id YOUR_CLUSTER_ID_HERE
```

Do this once per node. If you need to re-run it, you must wipe the log directory (/var/lib/kafka/kraft-combined-logs) first.
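Since the same ID has to be pasted on three machines, a quick sanity check can catch a truncated copy or a leftover placeholder. This sketch assumes the ID printed by `random-uuid` is a 16-byte UUID in unpadded base64url form (22 characters), which matches what I understand Kafka's `Uuid.toString()` to produce; `looks_like_cluster_id` is a hypothetical helper, not a Kafka tool.

```python
import base64
import re

# 16 random bytes, base64url-encoded without "=" padding -> exactly 22 characters
_CLUSTER_ID_RE = re.compile(r"[A-Za-z0-9_-]{22}")


def looks_like_cluster_id(value: str) -> bool:
    """Rough sanity check for a KRaft cluster ID pasted from `random-uuid`."""
    value = value.strip()
    if not _CLUSTER_ID_RE.fullmatch(value):
        return False
    # Re-add the two padding characters and confirm it decodes to 16 bytes
    return len(base64.urlsafe_b64decode(value + "==")) == 16
```

It only checks the shape of the string; `kafka-storage.sh` itself remains the authority on whether an ID is accepted.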

- Run Kafka as a systemd Service

On each node:

```bash
sudo vi /etc/systemd/system/kafka.service
```

```ini
[Unit]
Description=Apache Kafka 4.1 (KRaft mode)
After=network.target

[Service]
Type=simple
ExecStart=/opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties
ExecStop=/opt/kafka/bin/kafka-server-stop.sh
Restart=on-failure
RestartSec=5
TimeoutStopSec=30

[Install]
WantedBy=multi-user.target
```

Reload systemd and enable Kafka, but we'll start it after HAProxy is ready:

```bash
sudo systemctl daemon-reload
sudo systemctl enable kafka
```

- Install Certbot and HAProxy on Each Node

- Install Certbot (Cloudflare DNS) and HAProxy

On each node:

```bash
sudo apt update
sudo apt install -y certbot python3-certbot-dns-cloudflare

sudo add-apt-repository ppa:vbernat/haproxy-3.2 -y
sudo apt-get update
# Quoted so the shell does not try to glob-expand the version pattern
sudo apt-get install -y 'haproxy=3.2.*'

sudo systemctl enable --now haproxy
```

- Cloudflare DNS credentials

On each node:

```bash
mkdir -p ~/.secrets/certbot
vi ~/.secrets/certbot/cloudflare.ini
```

Put:

```ini
dns_cloudflare_api_token = YOUR_CLOUDFLARE_API_TOKEN
```

Then:

```bash
chmod 600 ~/.secrets/certbot/cloudflare.ini
```

- Issue Let's Encrypt certificates (per node)

On kafka-1:

```bash
sudo certbot certonly \
  --dns-cloudflare \
  --dns-cloudflare-credentials ~/.secrets/certbot/cloudflare.ini \
  -d kafka-1.maksonlee.com
```

On kafka-2:

```bash
sudo certbot certonly \
  --dns-cloudflare \
  --dns-cloudflare-credentials ~/.secrets/certbot/cloudflare.ini \
  -d kafka-2.maksonlee.com
```

On kafka-3:

```bash
sudo certbot certonly \
  --dns-cloudflare \
  --dns-cloudflare-credentials ~/.secrets/certbot/cloudflare.ini \
  -d kafka-3.maksonlee.com
```

Bundle cert + key for HAProxy, e.g. on kafka-1:

```bash
sudo mkdir -p /etc/haproxy/certs/
sudo bash -c "cat /etc/letsencrypt/live/kafka-1.maksonlee.com/fullchain.pem \
  /etc/letsencrypt/live/kafka-1.maksonlee.com/privkey.pem \
  > /etc/haproxy/certs/kafka-1.maksonlee.com.pem"
sudo chmod 600 /etc/haproxy/certs/kafka-1.maksonlee.com.pem
```

Repeat with the corresponding file names on kafka-2 and kafka-3.
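The `cat` above writes the bundle in place. If you prefer a variant that never leaves a half-written file on disk and enforces mode 0600 on every run, here is a minimal sketch: it writes to a temporary file in the target directory and then renames it over the bundle. `build_haproxy_bundle` is a hypothetical helper; it assumes Certbot's usual `fullchain.pem`/`privkey.pem` layout and must run as root to read `/etc/letsencrypt/live`.

```python
import os
import tempfile
from pathlib import Path


def build_haproxy_bundle(live_dir: str, out_path: str) -> None:
    """Write fullchain.pem followed by privkey.pem to out_path, atomically, mode 0600."""
    live = Path(live_dir)
    data = (live / "fullchain.pem").read_bytes()
    if not data.endswith(b"\n"):
        data += b"\n"  # keep the key's BEGIN line on its own line
    data += (live / "privkey.pem").read_bytes()

    out = Path(out_path)
    # mkstemp creates the temp file readable/writable by the owner only
    fd, tmp = tempfile.mkstemp(dir=out.parent, prefix=".bundle-")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
        os.chmod(tmp, 0o600)
        os.replace(tmp, out)  # atomic rename within the same filesystem
    except BaseException:
        os.unlink(tmp)
        raise
```

On kafka-1 this would be called as `build_haproxy_bundle("/etc/letsencrypt/live/kafka-1.maksonlee.com", "/etc/haproxy/certs/kafka-1.maksonlee.com.pem")`. The order matters: HAProxy reads the certificate chain and the private key from the same file.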

- Configure HAProxy for Kafka (Real Config)

Here’s the actual working HAProxy config on kafka-1:

```bash
sudo vi /etc/haproxy/haproxy.cfg
```

```
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin
    stats timeout 30s
    user haproxy
    group haproxy
    daemon

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

frontend fe_kafka_ssl
    bind 192.168.0.94:9093 ssl crt /etc/haproxy/certs/kafka-1.maksonlee.com.pem
    mode tcp
    default_backend bk_kafka_plaintext

backend bk_kafka_plaintext
    mode tcp
    server kafka_local 127.0.0.1:9093 check
```

Notes:

- `defaults` is in `mode http` because HAProxy may also serve HTTP elsewhere on the same host.
- For Kafka, we explicitly override to TCP mode in both the `frontend` and the `backend`.
- HAProxy listens on 192.168.0.94:9093 (TLS) and forwards to 127.0.0.1:9093 (Kafka's EXTERNAL PLAINTEXT listener).

On kafka-2 and kafka-3, the config is the same except for the IP and cert file.
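Because only the bind address and certificate path change from node to node, the Kafka part of the config can be rendered from the node table. A small templating sketch, assuming the `global` and `defaults` sections stay exactly as shown and that the bundle lives at `/etc/haproxy/certs/<hostname>.pem`; `haproxy_kafka_stanza` is a hypothetical helper whose output replaces the `frontend`/`backend` sections.

```python
def haproxy_kafka_stanza(hostname: str, ip: str,
                         tls_port: int = 9093,
                         kafka_port: int = 9093,
                         cert_dir: str = "/etc/haproxy/certs") -> str:
    """Render the Kafka frontend/backend pair for one node's haproxy.cfg."""
    return (
        "frontend fe_kafka_ssl\n"
        f"    bind {ip}:{tls_port} ssl crt {cert_dir}/{hostname}.pem\n"
        "    mode tcp\n"
        "    default_backend bk_kafka_plaintext\n"
        "\n"
        "backend bk_kafka_plaintext\n"
        "    mode tcp\n"
        f"    server kafka_local 127.0.0.1:{kafka_port} check\n"
    )


# Node table from "Target Architecture": hostname -> IP
NODES = {
    "kafka-1.maksonlee.com": "192.168.0.94",
    "kafka-2.maksonlee.com": "192.168.0.95",
    "kafka-3.maksonlee.com": "192.168.0.96",
}
```

For kafka-2, `print(haproxy_kafka_stanza("kafka-2.maksonlee.com", NODES["kafka-2.maksonlee.com"]))` produces the two sections with `bind 192.168.0.95:9093`. The TLS port and Kafka port are separate parameters only because they happen to be equal here.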

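Validate the config, then restart HAProxy on each node and check its status:

```bash
sudo haproxy -c -f /etc/haproxy/haproxy.cfg   # syntax check only
sudo systemctl restart haproxy
sudo systemctl status haproxy
```

- Automate Certificate Renewal (Per Node)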

Example for kafka-1:

```bash
sudo tee /etc/letsencrypt/renewal-hooks/deploy/reload-haproxy.sh > /dev/null <<'EOF'
#!/bin/bash
cat /etc/letsencrypt/live/kafka-1.maksonlee.com/fullchain.pem \
    /etc/letsencrypt/live/kafka-1.maksonlee.com/privkey.pem \
    > /etc/haproxy/certs/kafka-1.maksonlee.com.pem
systemctl reload haproxy
EOF

sudo chmod +x /etc/letsencrypt/renewal-hooks/deploy/reload-haproxy.sh
```

Repeat on kafka-2 and kafka-3 with their respective hostnames and file paths.

Now whenever Certbot renews a certificate, it will rebuild the HAProxy bundle and reload HAProxy automatically.
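To confirm from the outside that HAProxy is actually serving the renewed certificate, you can check its expiry over a real TLS connection. A minimal sketch using only the standard library: `ssl.create_default_context()` verifies the chain and hostname against the system CA store, and `ssl.cert_time_to_seconds` parses the `notAfter` field of the dict returned by `getpeercert()`. The function names are hypothetical.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_peer_cert(host: str, port: int = 9093, timeout: float = 5.0) -> dict:
    """TLS-connect through HAProxy, verifying against the system CA store."""
    ctx = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def days_until_expiry(peer_cert: dict, now: Optional[float] = None) -> float:
    """Days left before the certificate's notAfter date."""
    expires = ssl.cert_time_to_seconds(peer_cert["notAfter"])
    current = time.time() if now is None else now
    return (expires - current) / 86400
```

Run `days_until_expiry(fetch_peer_cert("kafka-1.maksonlee.com"))` from a client periodically. If the number keeps shrinking after Certbot reports a renewal, the deploy hook probably did not rebuild the bundle or reload HAProxy.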

- Start the Kafka Cluster and Verify

On each node:

```bash
sudo systemctl start kafka
sudo systemctl status kafka
```

To check KRaft quorum status (run on any node):

```bash
/opt/kafka/bin/kafka-metadata-quorum.sh \
  --bootstrap-controller 192.168.0.94:9094 \
  describe --status
```

You should see:

- `CurrentVoters` listing node IDs 1, 2, 3 with their 192.168.0.x:9094 endpoints
- one node as `LeaderId`
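For scripted health checks, the `Key: value` lines of that output can be parsed. This is a sketch under an assumption: `CurrentVoters` is treated as a JSON list of either plain IDs or objects with an `"id"` field, since the exact format has changed between Kafka versions. Check it against your own output before relying on it. `parse_quorum_status`, `voter_ids`, and `quorum_ok` are hypothetical helpers.

```python
import json


def parse_quorum_status(text: str) -> dict[str, str]:
    """Split 'Key:   value' lines from `describe --status` into a dict."""
    status = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")  # first colon only; values may contain colons
        if sep and key.strip():
            status[key.strip()] = value.strip()
    return status


def voter_ids(raw: str) -> set[int]:
    """Voter IDs from CurrentVoters, assuming a JSON list of ints or of {"id": ...} objects."""
    return {e["id"] if isinstance(e, dict) else int(e) for e in json.loads(raw)}


def quorum_ok(text: str, expected=frozenset({1, 2, 3})) -> bool:
    """True if the expected nodes are the voters and one of them is the leader."""
    status = parse_quorum_status(text)
    leader = int(status.get("LeaderId", "-1"))
    return leader in expected and voter_ids(status.get("CurrentVoters", "[]")) == set(expected)
```

Feed it the stdout of the command above, for example captured with `subprocess.run([...], capture_output=True, text=True).stdout`.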

To quickly create a test topic from inside the cluster, you can use the INTERNAL listener (PLAINTEXT) from any node, e.g. on kafka-1:

```bash
/opt/kafka/bin/kafka-topics.sh \
  --create \
  --topic test-topic \
  --bootstrap-server 192.168.0.94:9092 \
  --partitions 3 \
  --replication-factor 3
```

- Test from a Client (Python, Confluent Kafka)

From an external client machine, Kafka is reachable over TLS on:

- kafka-1.maksonlee.com:9093
- kafka-2.maksonlee.com:9093
- kafka-3.maksonlee.com:9093

Because we used Let's Encrypt, the system CA store is enough; no extra certificate configuration is required for confluent-kafka.
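Clients use the bootstrap list only for their first metadata request. After that, they connect to the host and port each broker advertises on the listener the request arrived on (EXTERNAL here), so every advertised name must match that node's certificate. A sketch of a check, assuming the `confluent-kafka` package: `AdminClient.list_topics()` returns cluster metadata whose `brokers` map holds each broker's `id`, `host`, and `port`. The helper names and `EXPECTED_BROKERS` are hypothetical.

```python
# Expected EXTERNAL endpoints per node.id (hostnames must match each node's certificate)
EXPECTED_BROKERS = {
    1: ("kafka-1.maksonlee.com", 9093),
    2: ("kafka-2.maksonlee.com", 9093),
    3: ("kafka-3.maksonlee.com", 9093),
}


def advertised_brokers(conf: dict, timeout: float = 10.0) -> dict[int, tuple[str, int]]:
    """Fetch cluster metadata over the TLS bootstrap servers: node.id -> (host, port)."""
    from confluent_kafka.admin import AdminClient  # requires the confluent-kafka package

    metadata = AdminClient(conf).list_topics(timeout=timeout)
    return {b.id: (b.host, b.port) for b in metadata.brokers.values()}


def advertised_mismatches(actual: dict, expected: dict = EXPECTED_BROKERS) -> dict:
    """node.id -> (actual, expected) for every broker advertising the wrong endpoint."""
    return {
        node_id: (actual.get(node_id), want)
        for node_id, want in expected.items()
        if actual.get(node_id) != want
    }
```

Call `advertised_mismatches(advertised_brokers(conf))` with the same `bootstrap.servers` and `security.protocol` settings as the client examples. An empty result means every broker points clients back through its own HAProxy.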

Producer

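```python
from confluent_kafka import Producer

conf = {
    # Any reachable broker works for bootstrapping; listing all three adds resilience
    'bootstrap.servers': 'kafka-1.maksonlee.com:9093,'
                         'kafka-2.maksonlee.com:9093,'
                         'kafka-3.maksonlee.com:9093',
    # TLS to HAProxy; the server certificate is verified against the system CA store
    'security.protocol': 'ssl',
}

producer = Producer(conf)


def delivery_report(err, msg):
    """Invoked from poll()/flush() once per message with its delivery result."""
    if err is not None:
        print('Delivery failed:', err)
    else:
        print(f'Message delivered to {msg.topic()} [{msg.partition()}] @ offset {msg.offset()}')


producer.produce('test-topic', key='key1', value='Hello Kafka 3-node cluster!', callback=delivery_report)
producer.flush()  # block until outstanding messages are delivered or fail
```

Consumer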

```python
from confluent_kafka import Consumer

conf = {
    'bootstrap.servers': 'kafka-1.maksonlee.com:9093,'
                         'kafka-2.maksonlee.com:9093,'
                         'kafka-3.maksonlee.com:9093',
    'security.protocol': 'ssl',
    'group.id': 'test-group',
    # Start from the beginning of the topic when the group has no committed offset
    'auto.offset.reset': 'earliest',
}

consumer = Consumer(conf)
consumer.subscribe(['test-topic'])

print('Waiting for messages...')
msg = consumer.poll(10.0)  # wait up to 10 s for one message

if msg is None:
    print("No message received.")
elif msg.error():
    print("Error:", msg.error())
else:
    print(f"Received: {msg.value().decode('utf-8')} from partition {msg.partition()} @ offset {msg.offset()}")

consumer.close()  # leave the group cleanly (commits offsets when auto-commit is enabled)
```

- Notes on TLS and mTLS

Just like the single-node setup:

| Aspect | Status |
|---|---|
| TLS encryption between client ↔ HAProxy | ✅ Yes |
| Kafka sees TLS / client certificates | ❌ No |
| mTLS (client cert auth at Kafka) | ❌ No |

TLS is terminated at HAProxy. Kafka only ever speaks PLAINTEXT to HAProxy (EXTERNAL://127.0.0.1:9093) and to other brokers (INTERNAL://192.168.0.x:9092).

To implement end-to-end mTLS at Kafka itself, you’d need to:

- Let Kafka terminate TLS directly on one of its listeners

- Either remove HAProxy from the data path or run it in `mode tcp` pass-through (no TLS termination)

That’s a different architecture and not covered in this post.
