In this post, we’ll walk through setting up Ceph RGW (RADOS Gateway) multisite using two separate clusters on Ubuntu 24.04. The goal is to replicate S3-compatible object storage between independent zones with TLS termination via HAProxy and automatic certificates from Let’s Encrypt.

We’ll configure:

- Two Ceph clusters (zone1 and zone2)

- Multisite realm, zonegroup, and zone configuration

- TLS via HAProxy and DNS-validated Let’s Encrypt certs

- RGW replication for both metadata and objects

Why Use Ceph RGW Multisite?

When you’re operating across regions, a single Ceph cluster might not cut it. You may need your object storage to exist in multiple datacenters, with each site able to:

- Serve S3 requests locally for lower latency

- Keep running even if another site goes offline

- Synchronize buckets and objects automatically

That’s exactly what Ceph RGW Multisite enables.

Geo-Distributed Object Storage

Each Ceph zone can exist in a different region (e.g., Asia and Europe), providing local read/write access while replicating data across sites.

Bidirectional Replication

Objects uploaded to either zone are asynchronously replicated to the other, so all participating clusters become eventually consistent.

Fault Tolerance

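Each zone keeps serving S3 requests if the other zone goes down, and replication resumes automatically once the failed zone recovers. Metadata changes such as creating buckets or users go through the master zone, so they are unavailable while the master zone is down unless a secondary zone is promoted.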

Cluster Overview

| Hostname | IP Address | Role |
|---|---|---|
| ceph-zone1 | 192.168.0.82 | MON, MGR, OSD, RGW (Primary Zone) |
| ceph-zone2 | 192.168.0.83 | MON, MGR, OSD, RGW (Secondary Zone) |
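Both nodes run the same bootstrap command and differ only in `--mon-ip`. The sketch below maps each host to its address from the table. It assumes the nodes' short hostnames are exactly `ceph-zone1` and `ceph-zone2`, and the `zone_ip` function is hypothetical:

```shell
# Hypothetical helper: print the IP address of a node from the cluster overview.
# Defaults to the current host's short name; assumes hostnames match the table.
zone_ip() {
  local host=${1:-$(hostname -s)}
  case "$host" in
    ceph-zone1) echo 192.168.0.82 ;;
    ceph-zone2) echo 192.168.0.83 ;;
    *) echo "zone_ip: unknown host '$host'" >&2; return 1 ;;
  esac
}

# Example (run on either node):
#   sudo cephadm bootstrap --mon-ip "$(zone_ip)" ...
```

With the function defined in each node's shell, the bootstrap command can be pasted unchanged on both nodes.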

- Bootstrap Ceph on Both Zones

On both ceph-zone1 and ceph-zone2:

sudo apt update

sudo apt install -y cephadm

sudo cephadm bootstrap \

--mon-ip <your-zone-ip> \

--initial-dashboard-user admin \

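--initial-dashboard-password admin123

Disable HTTPS on the dashboard (HAProxy will terminate TLS in front of it). Then apply a lab-only placement policy for a single-node, single-OSD cluster (one replica per object, so there is no data redundancy), and add the OSD: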

sudo cephadm shell

ceph config set mgr mgr/dashboard/ssl false

ceph mgr module disable dashboard

ceph mgr module enable dashboard

ceph config set global osd_crush_chooseleaf_type 0

ceph config set global osd_pool_default_size 1

ceph config set global osd_pool_default_min_size 1

ceph orch daemon add osd <hostname>:/dev/sdb

exit

- Set Up HAProxy and Let’s Encrypt TLS

On both nodes, install HAProxy and Certbot:

sudo add-apt-repository ppa:vbernat/haproxy-3.2 -y

sudo apt update

sudo apt install -y 'haproxy=3.2.*' certbot python3-certbot-dns-cloudflare

Generate a certificate using the DNS-01 challenge (the Cloudflare API token needs Zone:DNS:Edit permission):

mkdir -p ~/.secrets/certbot
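If you are scripting the setup, you can skip the `vi` edit and `chmod` that follow and create the credentials file non-interactively with mode 600 from the start. Here is a minimal sketch; the `write_cloudflare_ini` function is hypothetical:

```shell
# Hypothetical helper: write the certbot-dns-cloudflare credentials file so that
# a newly created file is never group- or world-readable.
write_cloudflare_ini() {
  local token=$1 path=$2
  # umask 077 inside a subshell makes new files mode 600 without changing
  # the umask of the calling shell.
  ( umask 077 && printf 'dns_cloudflare_api_token = %s\n' "$token" > "$path" )
  # If the file already existed, the redirect keeps its old mode, so enforce 600.
  chmod 600 "$path"
}

# Example:
#   write_cloudflare_ini "YOUR_CLOUDFLARE_API_TOKEN" ~/.secrets/certbot/cloudflare.ini
```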

vi ~/.secrets/certbot/cloudflare.ini

Add your Cloudflare API token:

dns_cloudflare_api_token = YOUR_CLOUDFLARE_API_TOKEN

Restrict the file's permissions and request the certificate:

chmod 600 ~/.secrets/certbot/cloudflare.ini

sudo certbot certonly \

--dns-cloudflare \

--dns-cloudflare-credentials ~/.secrets/certbot/cloudflare.ini \

-d <hostname>.maksonlee.com

Concatenate fullchain and key into HAProxy-friendly format:

sudo mkdir -p /etc/haproxy/certs/

sudo bash -c "cat /etc/letsencrypt/live/<hostname>.maksonlee.com/fullchain.pem \

/etc/letsencrypt/live/<hostname>.maksonlee.com/privkey.pem \

> /etc/haproxy/certs/<hostname>.maksonlee.com.pem"
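Let’s Encrypt certificates are valid for 90 days. Renewing them does not regenerate this combined file, so HAProxy would keep serving the old certificate. One way to automate the rebuild is a certbot deploy hook, sketched below. It assumes certbot's standard `/etc/letsencrypt/renewal-hooks/deploy/` directory; the script name `haproxy-pem.sh` and the `build_haproxy_pem` function are hypothetical. certbot runs the executables in that directory after each successful renewal and sets `RENEWED_LINEAGE` to the renewed certificate's `live/` directory.

```shell
#!/usr/bin/env bash
# Sketch of /etc/letsencrypt/renewal-hooks/deploy/haproxy-pem.sh (hypothetical name).
# certbot exports RENEWED_LINEAGE, e.g. /etc/letsencrypt/live/<hostname>.maksonlee.com
set -eu

# Concatenate fullchain + private key into the single PEM file HAProxy loads.
build_haproxy_pem() {
  local lineage=$1 out=$2 tmp
  tmp=$(mktemp "${out}.XXXXXX")   # mktemp creates the file with mode 600
  cat "$lineage/fullchain.pem" "$lineage/privkey.pem" > "$tmp"
  mv -f "$tmp" "$out"             # rename within the same directory: target is never half-written
}

if [ -n "${RENEWED_LINEAGE:-}" ]; then
  build_haproxy_pem "$RENEWED_LINEAGE" "/etc/haproxy/certs/$(basename "$RENEWED_LINEAGE").pem"
  haproxy -c -f /etc/haproxy/haproxy.cfg   # with set -e, a broken config aborts before reload
  systemctl reload haproxy
fi
```

Make the script executable (`sudo chmod 755 /etc/letsencrypt/renewal-hooks/deploy/haproxy-pem.sh`). To test it by hand, run `sudo env RENEWED_LINEAGE=/etc/letsencrypt/live/<hostname>.maksonlee.com /etc/letsencrypt/renewal-hooks/deploy/haproxy-pem.sh`.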

sudo chmod 600 /etc/haproxy/certs/<hostname>.maksonlee.com.pem

Edit /etc/haproxy/haproxy.cfg and add the following:

# S3 over 443

frontend fe_s3_https

bind *:443 ssl crt /etc/haproxy/certs/<hostname>.maksonlee.com.pem

mode http

default_backend be_rgw_s3

# Dashboard over 8443

frontend fe_dashboard_https

bind *:8443 ssl crt /etc/haproxy/certs/<hostname>.maksonlee.com.pem

mode http

default_backend be_dashboard

backend be_rgw_s3

mode http

server rgw1 <your-zone-ip>:8081 check

backend be_dashboard

mode http

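server ceph1 <your-zone-ip>:8080 check

Check the configuration and reload HAProxy:

sudo haproxy -c -f /etc/haproxy/haproxy.cfg

sudo systemctl reload haproxy

- Configure RGW Multisite on Zone 1 (Primary)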

Enter the Ceph shell:

sudo cephadm shell

Create Realm, Zonegroup, and Zone

radosgw-admin realm create --rgw-realm=maksonlee-realm --default

radosgw-admin zonegroup create \

--rgw-zonegroup=maksonlee-zg \

--endpoints=https://ceph-zone1.maksonlee.com \

--rgw-realm=maksonlee-realm \

--master --default

radosgw-admin zone create \

--rgw-zonegroup=maksonlee-zg \

--rgw-zone=zone1 \

--endpoints=https://ceph-zone1.maksonlee.com \

--master --default

Clean Up Default Configuration

Remove the zonegroup and zone named default, committing the period after each change:

radosgw-admin zonegroup delete --rgw-zonegroup=default --rgw-zone=default || true

radosgw-admin period update --commit

radosgw-admin zone delete --rgw-zone=default || true

radosgw-admin period update --commit

radosgw-admin zonegroup delete --rgw-zonegroup=default || true

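radosgw-admin period update --commit

Delete Default Pools

Ceph refuses to delete pools unless mon_allow_pool_delete is enabled, so allow it first:

ceph config set mon mon_allow_pool_delete true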
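The set of `default.rgw.*` pools that actually exists can vary between Ceph releases. Instead of the fixed list that follows, you can remove only the `default.rgw.*` pools present on the cluster. The sketch below uses hypothetical helpers and enables `mon_allow_pool_delete` just for the deletion:

```shell
# Print only the pool names that belong to the unused "default" zone.
# Reads one pool name per line on stdin (e.g. the output of `ceph osd pool ls`).
default_rgw_pools() {
  grep -E '^default\.rgw\.' || true
}

# Hypothetical helper: delete every existing default.rgw.* pool.
# Needs a live cluster (run inside `cephadm shell`); .rgw.root and the
# pools of the new zones (zone1.rgw.*, zone2.rgw.*) are left alone.
delete_default_rgw_pools() {
  local pool
  ceph config set mon mon_allow_pool_delete true
  for pool in $(ceph osd pool ls | default_rgw_pools); do
    ceph osd pool rm "$pool" "$pool" --yes-i-really-really-mean-it
  done
  # Turn pool deletion off again as a safety net.
  ceph config set mon mon_allow_pool_delete false
}
```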

ceph osd pool rm default.rgw.control default.rgw.control --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.data.root default.rgw.data.root --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.gc default.rgw.gc --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.log default.rgw.log --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.users.uid default.rgw.users.uid --yes-i-really-really-mean-it

Create Synchronization User and Get Credentials

The zones authenticate to each other with a system user. Create it on zone1:

radosgw-admin user create --uid="synchronization-user" \

--display-name="Synchronization User" --system

Now retrieve the access/secret keys:

radosgw-admin user info --uid="synchronization-user"

Relevant part of the output (your keys will differ):

"access_key": "RSIQR09R2BY8PRJP8OPT",

"secret_key": "qItSZfEKWysDBDDzGZFBdkkwUATlQSmtKV2t0e6A"

Assign Credentials to the Zone

radosgw-admin zone modify --rgw-zone=zone1 \

--access-key=RSIQR09R2BY8PRJP8OPT \

--secret=qItSZfEKWysDBDDzGZFBdkkwUATlQSmtKV2t0e6A
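Instead of pasting the keys by hand, you can read them from the JSON printed by `radosgw-admin user info` into shell variables. Here is a minimal sketch with a hypothetical `rgw_key` helper. It assumes `python3` is available in the shell where you run it; `python3` is used because `jq` may not be installed.

```shell
# Hypothetical helper: print one field ("access_key" or "secret_key") of the
# first key pair in `radosgw-admin user info` JSON read from stdin.
rgw_key() {
  python3 -c 'import json, sys; print(json.load(sys.stdin)["keys"][0][sys.argv[1]])' "$1"
}

# Example, inside `cephadm shell` on ceph-zone1:
#   ACCESS_KEY=$(radosgw-admin user info --uid=synchronization-user | rgw_key access_key)
#   SECRET_KEY=$(radosgw-admin user info --uid=synchronization-user | rgw_key secret_key)
#   radosgw-admin zone modify --rgw-zone=zone1 --access-key="$ACCESS_KEY" --secret="$SECRET_KEY"
```

The variables exist only in that shell session, so you still need to copy the two values to ceph-zone2 for the `realm pull` and `zone create` commands. Either way, commit the period after modifying the zone.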

radosgw-admin period update --commit

Deploy RGW Daemon

ceph orch apply rgw zone1.rgw --placement="ceph-zone1" --port=8081

- Configure RGW on Zone 2 (Secondary)

On ceph-zone2, enter the Ceph shell:

sudo cephadm shell

Pull Realm and Join Multisite
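`realm pull` reaches zone1 through HAProxy at https://ceph-zone1.maksonlee.com, so a failure there may be a DNS, TLS, or HAProxy problem rather than a Ceph one. You can run a rough pre-flight check from ceph-zone2, sketched below with `curl` and hypothetical helper names. It treats any HTTP status as a sign that RGW answered, except curl's `000` (no connection or failed TLS handshake) and HAProxy's 502/503/504 (RGW backend unreachable).

```shell
# Hypothetical helpers for a pre-flight check before `radosgw-admin realm pull`.

# Classify an HTTP status: 000 = curl could not connect or TLS failed;
# 502/503/504 = HAProxy answered but could not reach a healthy RGW backend.
status_reaches_rgw() {
  case "$1" in
    000|502|503|504|"") return 1 ;;
    *) return 0 ;;
  esac
}

# Request the endpoint, print the status, and report whether RGW answered.
endpoint_ok() {
  local code
  code=$(curl -sS -o /dev/null -w '%{http_code}' --max-time 10 "$1") || true
  echo "$1 -> HTTP ${code:-000}"
  status_reaches_rgw "$code"
}

# Example, on ceph-zone2:
#   endpoint_ok https://ceph-zone1.maksonlee.com && echo "ready for realm pull"
```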

radosgw-admin realm pull \

--url=https://ceph-zone1.maksonlee.com \

--access-key=RSIQR09R2BY8PRJP8OPT \

--secret=qItSZfEKWysDBDDzGZFBdkkwUATlQSmtKV2t0e6A

radosgw-admin realm default --rgw-realm=maksonlee-realm

Create zone2 in the existing zonegroup, using the same system user keys:

radosgw-admin zone create \

--rgw-zonegroup=maksonlee-zg \

--rgw-zone=zone2 \

--access-key=RSIQR09R2BY8PRJP8OPT \

--secret=qItSZfEKWysDBDDzGZFBdkkwUATlQSmtKV2t0e6A \

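--endpoints=https://ceph-zone2.maksonlee.com

Delete the default zone and pools (pool deletion must be allowed on this cluster as well):

ceph config set mon mon_allow_pool_delete true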

radosgw-admin zone delete --rgw-zone=default || true

ceph osd pool rm default.rgw.control default.rgw.control --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.data.root default.rgw.data.root --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.gc default.rgw.gc --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.log default.rgw.log --yes-i-really-really-mean-it

ceph osd pool rm default.rgw.users.uid default.rgw.users.uid --yes-i-really-really-mean-it

Commit the changes:

radosgw-admin period update --commit

Deploy RGW:

ceph orch apply rgw zone2.rgw \

--realm=maksonlee-realm \

--zone=zone2 \

--placement="ceph-zone2" --port=8081

- Verify Synchronization Status

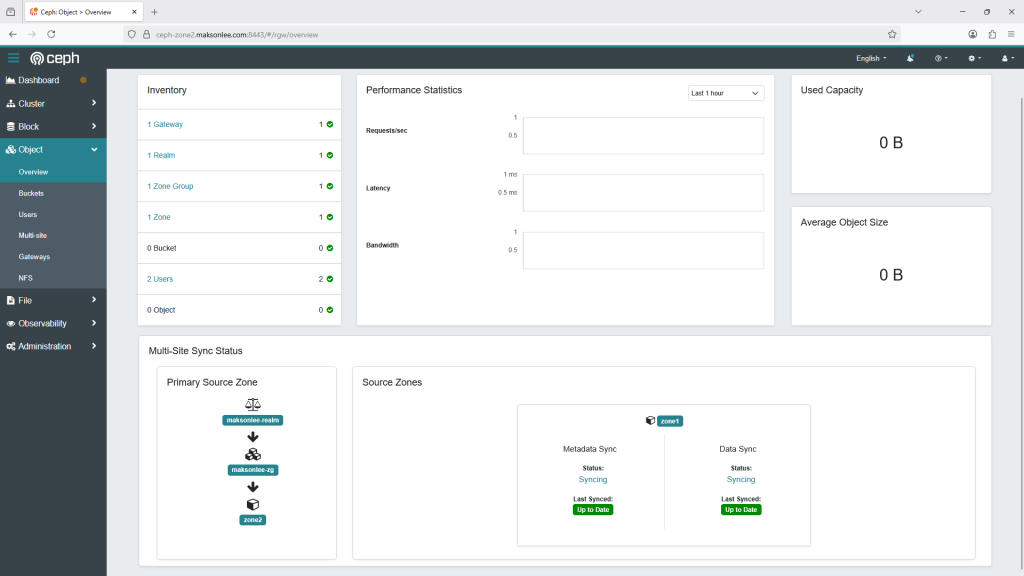

From ceph-zone2, check:

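radosgw-admin sync status

You should see metadata and object data syncing from zone1. In the output below, "metadata is caught up with master" and "data is caught up with source" confirm that zone2 is in sync. Congratulations, you now have a working multisite Ceph RGW setup.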

realm b80c1943-648b-4a01-9e12-2293af9d05dd (maksonlee-realm)

zonegroup 05c94c56-66e5-4929-97cc-ed354a42a71e (maksonlee-zg)

zone 8bb90ca8-9d7f-4ea7-8d8e-c473119703a1 (zone2)

current time 2025-07-29T12:11:50Z

zonegroup features enabled: notification_v2,resharding

disabled: compress-encrypted

metadata sync syncing

full sync: 0/64 shards

incremental sync: 64/64 shards

metadata is caught up with master

data sync source: f6bd32bb-319d-4edd-b939-1fb859444fc1 (zone1)

syncing

full sync: 0/128 shards

incremental sync: 128/128 shards

data is caught up with source
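For scripting or monitoring, you can check the two "caught up" lines above automatically. The sketch below uses a hypothetical `sync_caught_up` function that reads `radosgw-admin sync status` output on stdin. It succeeds only when both metadata and data report being caught up and no line mentions being behind. It assumes the wording shown above, which may differ between Ceph releases, and it is meant for the secondary zone, since the master zone does not report metadata sync this way.

```shell
# Hypothetical check: succeed only if `radosgw-admin sync status` output (stdin)
# says metadata and data are caught up and nothing is reported as behind.
sync_caught_up() {
  local status
  status=$(cat)
  printf '%s\n' "$status" | grep -q 'metadata is caught up with master' &&
    printf '%s\n' "$status" | grep -q 'data is caught up with source' &&
    ! printf '%s\n' "$status" | grep -q 'behind'
}

# Example, inside `cephadm shell` on ceph-zone2:
#   radosgw-admin sync status | sync_caught_up && echo "zone2 is in sync"
```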
