This follow-up shows a clean, minimal path to putting a libvirt/QEMU VM disk on Ceph RBD and creating the VM with Cockpit.

This post is a follow-up to:

If you finished those posts, the only extra package needed on the KVM/libvirt host is:

```shell
sudo apt install -y libvirt-daemon-driver-storage-rbd
sudo systemctl restart libvirtd   # important: loads the RBD backend
```

What you’ll end up with

- A Ceph RBD pool ready for VM disks

- A `client.libvirt` user + key (used by libvirt as a secret)

- A libvirt storage pool mapped to your Ceph RBD pool

- A KVM VM created with its disk on RBD

Tip: Replace placeholders like <pool-name>, <mon-ip>, <SECRET_UUID> with your values.
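To confirm that the package install and `libvirtd` restart above took effect, you can check whether libvirt now reports the RBD backend as supported. This is a minimal sketch, assuming a libvirt release new enough to provide `virsh pool-capabilities` (older releases lack it) and that its XML marks each backend as `<pool type='...' supported='yes|no'>`; the helper name `rbd_backend_supported` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: reads `virsh pool-capabilities` XML on stdin and
# succeeds only if the rbd storage backend is reported as supported.
# Assumes the <pool type='rbd' supported='yes'> form used by libvirt.
rbd_backend_supported() {
  grep -q "<pool type=['\"]rbd['\"] supported=['\"]yes['\"]"
}

# Usage on the KVM host (uncomment to run):
# sudo virsh pool-capabilities | rbd_backend_supported && echo "rbd backend OK"
```

If it fails, re-check that the package installed cleanly and that `libvirtd` was actually restarted.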

- Create an RBD pool (on a Ceph host)

```shell
ceph osd pool create <pool-name> 8
ceph osd pool application enable <pool-name> rbd
rbd pool init <pool-name>
```

- Create the libvirt user and copy its key (Ceph host)

```shell
ceph auth get-or-create client.libvirt \
  mon 'allow r' \
  osd 'allow rwx pool=<pool-name>'

# Immediately print the Base64 key for this user and copy it:
ceph auth get-key client.libvirt
```

You’ll paste this Base64 key later when creating the libvirt secret on the KVM host.
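A common paste mistake is copying the whole keyring line (`key = AQ...`) instead of just the key. The helper below is a hypothetical, offline sanity check, assuming only that the value printed by `ceph auth get-key` is a single standard Base64 string (alphabet `A-Z a-z 0-9 + /`, length a multiple of 4, at most two trailing `=`). It does not prove Ceph will accept the key.

```shell
#!/bin/sh
# Hypothetical helper: returns 0 if $1 looks like one bare standard Base64 string.
looks_like_base64() {
  b64_candidate=$1
  [ -n "$b64_candidate" ] || return 1
  case $b64_candidate in
    *[!A-Za-z0-9+/=]*) return 1 ;;   # stray spaces, "key =", newlines, etc.
    *=*[!=]*) return 1 ;;            # '=' padding is only allowed at the end
    *===*) return 1 ;;               # at most two padding characters
  esac
  [ $(( ${#b64_candidate} % 4 )) -eq 0 ]   # Base64 length is a multiple of 4
}

# Usage on the KVM host, after setting CEPH_KEY_B64 in the next step:
# looks_like_base64 "$CEPH_KEY_B64" || echo "CEPH_KEY_B64 is not a bare Base64 key"
```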

- Create a libvirt secret with the Base64 key (KVM host)

In libvirt/QEMU, the username (Ceph ID) is `libvirt`, without the `client.` prefix.

```shell
SECRET_UUID=$(uuidgen)
CEPH_KEY_B64='<paste the Base64 from: ceph auth get-key client.libvirt>'

cat >/tmp/ceph-secret.xml <<EOF
<secret ephemeral='no' private='no'>
  <uuid>${SECRET_UUID}</uuid>
  <usage type='ceph'>
    <name>client.libvirt secret</name>
  </usage>
</secret>
EOF

sudo virsh secret-define /tmp/ceph-secret.xml

# Works-first: setting via CLI will warn it's insecure; that's OK for now
sudo virsh secret-set-value --secret "${SECRET_UUID}" --base64 "${CEPH_KEY_B64}"

sudo virsh secret-list
```

About the warning you’ll see:

`virsh secret-set-value` often prints:

```
error: Passing secret value as command-line argument is insecure!
Secret value set
```

The first line is just a warning about passing the secret as a command-line argument. The second line confirms the value was stored.
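`SECRET_UUID` only lives in the shell where you ran `uuidgen`. If you open a new shell before the next step, you can recover it from `virsh secret-list`. This is a hypothetical sketch that parses the human-readable table, assuming rows look like ` <uuid>   ceph client.libvirt secret` (UUID column, then usage type and usage name); that layout is not a stable interface, so check it against your own output.

```shell
#!/bin/sh
# Hypothetical helper: reads `virsh secret-list` output on stdin and prints
# the UUID of the ceph secret whose usage name is $1 (prints nothing if absent).
# Assumes rows look like: " <uuid>   ceph <usage name>" after two header lines.
ceph_secret_uuid() {
  awk -v want="ceph $1" '
    NR > 2 {
      uuid = $1
      sub(/^[ \t]*[^ \t]+[ \t]+/, "")   # drop leading space and the UUID column
      sub(/[ \t]+$/, "")                # drop trailing padding, if any
      if ($0 == want) { print uuid; exit }
    }
  '
}

# Usage on the KVM host (uncomment to run):
# SECRET_UUID=$(sudo virsh secret-list | ceph_secret_uuid 'client.libvirt secret')
```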

- Define a Ceph RBD storage pool in libvirt (single MON)

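Point it at your single Ceph node’s MON IP. If you omit the port, libvirt defaults to msgr1 on port 6789, which is reliable for a first run. Run these commands in the same shell as the previous step so `${SECRET_UUID}` is still set; otherwise, copy the UUID from `sudo virsh secret-list`.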

```shell
MON=<mon-ip>            # e.g., 192.168.0.82
POOL_NAME=<pool-name>   # e.g., vm

sudo virsh pool-define-as ceph-vm rbd \
  --source-host "${MON}" \
  --source-name "${POOL_NAME}" \
  --auth-type ceph \
  --auth-username libvirt \
  --secret-uuid "${SECRET_UUID}"

sudo virsh pool-start ceph-vm
sudo virsh pool-autostart ceph-vm
sudo virsh pool-info ceph-vm   # sudo: the pool lives in qemu:///system
```

Expected XML (the core of what the CLI produces; inspect it with `sudo virsh pool-dumpxml ceph-vm`):

```xml
<pool type='rbd'>
  <name>ceph-vm</name>
  <source>
    <host name='<mon-ip>'/>  <!-- no port => default 6789 (msgr1) -->
    <name><pool-name></name>
    <auth type='ceph' username='libvirt'>
      <secret uuid='<SECRET_UUID>'/>
    </auth>
  </source>
</pool>
```

- Pre-create the RBD volume (so Cockpit can select it)

Some Cockpit builds offer "Create new raw volume" in the VM wizard, but that option creates a file-backed volume (dir pool), not an RBD image. For RBD, create the volume first, then select it:

```shell
sudo virsh vol-create-as ceph-vm myvm-root 10G --format raw
sudo virsh pool-refresh ceph-vm   # if Cockpit doesn't see it yet
```

Use raw on RBD (don’t layer qcow2 on top of RBD unless you truly need qcow2 features).
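Before switching to Cockpit, you can confirm from the shell that the pool is running with autostart enabled and that the new volume is listed. This is a hypothetical sketch: the function names are made up, and it parses the human-readable output of `virsh pool-info` (`State:` / `Autostart:` lines) and `virsh vol-list` (volume name in the first column after two header lines), which may differ between libvirt versions.

```shell
#!/bin/sh
# Hypothetical helpers that parse human-readable virsh output from stdin.

# Succeeds if `virsh pool-info` output shows the pool running with autostart on.
pool_ready() {
  awk -F': *' '
    $1 == "State"     && $2 == "running" { s = 1 }
    $1 == "Autostart" && $2 == "yes"     { a = 1 }
    END { exit !(s && a) }
  '
}

# Succeeds if `virsh vol-list <pool>` output contains a volume named $1.
vol_listed() {
  awk -v name="$1" 'NR > 2 && $1 == name { found = 1 } END { exit !found }'
}

# Usage on the KVM host (uncomment to run):
# sudo virsh pool-info ceph-vm | pool_ready && echo "pool OK"
# sudo virsh vol-list ceph-vm | vol_listed myvm-root && echo "volume OK"
```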

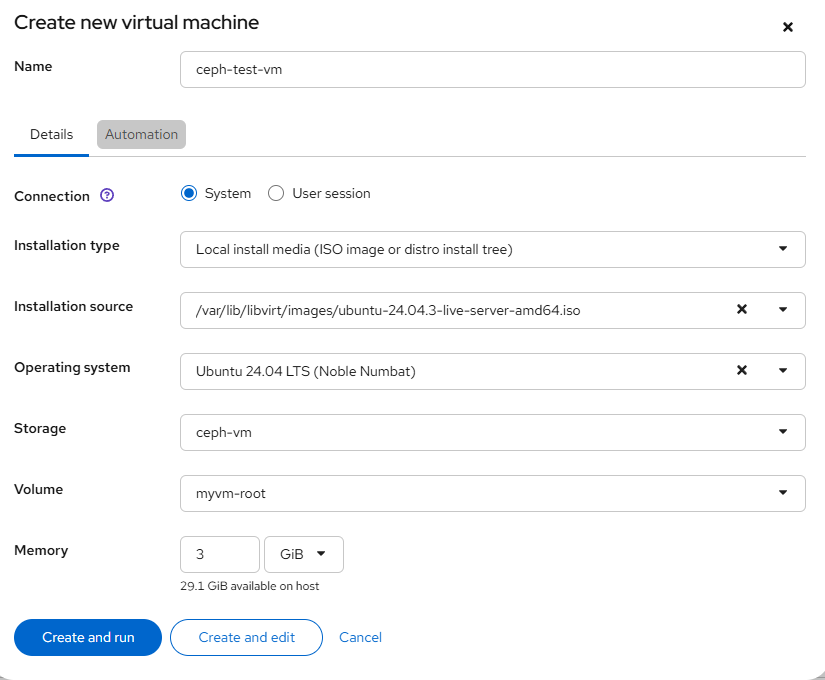

- Create the VM in Cockpit using the RBD volume

That’s it: a tidy, repeatable path to build a KVM VM backed by Ceph RBD with Cockpit, tailored to a single-node Ceph setup and requiring just one extra package on the KVM host.
