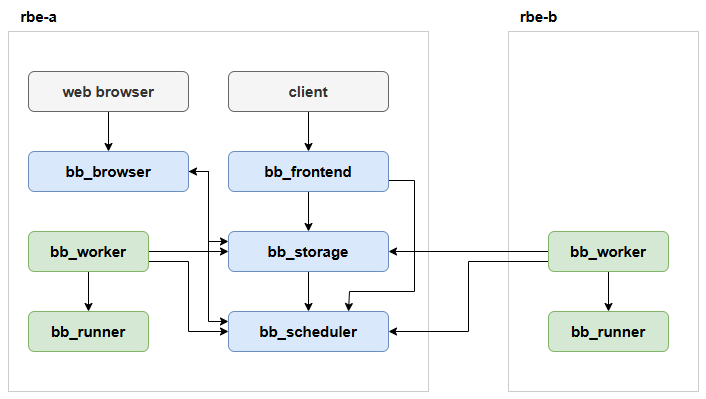

Topology

- rbe-a.maksonlee.com = storage (CAS/AC) + frontend (REAPI) + scheduler + browser + worker + runner

- rbe-b.maksonlee.com = worker + runner

What you get

- Build from source for five binaries: bb_storage, bb_scheduler, bb_worker, bb_runner, bb_browser

- AOSP-sane sizes for CAS/AC and worker file pools

- Minimal Jsonnet configs for bare metal, fixed hostnames, and an empty instance name

- systemd units to manage everything

- grpcurl and HTTP checks to verify (CLI-only)

TL;DR

Build the five binaries with Bazelisk, create storage/worker file pools, drop the Jsonnet files under /etc/buildbarn/, install the systemd services, start bb-storage → scheduler → frontend → browser → runner → worker, and point the AOSP RBE client (reclient/reproxy) at rbe-a.maksonlee.com:8980.
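The topology and start order above can be sketched as a small helper that maps a hostname to the units it should run. This is only an illustration: the function name units_for_host is hypothetical, the unit names are the ones defined in the systemd section of this guide, and the loop prints the commands instead of running them.

```shell
# Print the systemd units a host should run, in start order
# (storage -> scheduler -> frontend -> browser -> runner -> worker).
units_for_host() {
  case "$1" in
    rbe-a.maksonlee.com)
      echo "bb-storage bb-scheduler bb-frontend bb-browser bb-runner bb-worker" ;;
    rbe-b.maksonlee.com)
      echo "bb-runner bb-worker" ;;
    *)
      echo "unknown host: $1" >&2
      return 1 ;;
  esac
}

# Dry run: show what would be started on the worker-only host.
for unit in $(units_for_host rbe-b.maksonlee.com); do
  echo "sudo systemctl enable --now $unit"
done
```

Passing "$(hostname -f)" instead of a literal name would let the same script run on either machine, assuming the hosts report their fully qualified names.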

- Prereqs (both hosts)

sudo apt update

sudo apt-get install -y \
  git gnupg flex bison build-essential zip curl zlib1g-dev libc6-dev-i386 \
  x11proto-core-dev libx11-dev lib32z1-dev libgl1-mesa-dev libxml2-utils \
  xsltproc unzip fontconfig

Install Bazelisk (used as bazel)

curl -LO https://github.com/bazelbuild/bazelisk/releases/latest/download/bazelisk-linux-amd64

chmod +x bazelisk-linux-amd64

sudo mv bazelisk-linux-amd64 /usr/local/bin/bazel

bazel version

Install grpcurl

VERSION=v1.9.3

curl -L "https://github.com/fullstorydev/grpcurl/releases/download/${VERSION}/grpcurl_${VERSION#v}_linux_x86_64.tar.gz" -o /tmp/grpcurl.tgz

tar -C /tmp -xzf /tmp/grpcurl.tgz grpcurl

sudo install -m0755 /tmp/grpcurl /usr/local/bin/grpcurl

grpcurl --version

- Get sources & build binaries from source

mkdir -p ~/src && cd ~/src

git clone https://github.com/buildbarn/bb-remote-execution.git

git clone https://github.com/buildbarn/bb-storage.git

git clone https://github.com/buildbarn/bb-browser.git

Build bb-remote-execution (scheduler/worker/runner)

cd ~/src/bb-remote-execution

bazel build //cmd/bb_scheduler:bb_scheduler //cmd/bb_worker:bb_worker //cmd/bb_runner:bb_runner

SCHED_BIN="$(bazel cquery --output=files //cmd/bb_scheduler:bb_scheduler | head -n1)"

WORKER_BIN="$(bazel cquery --output=files //cmd/bb_worker:bb_worker | head -n1)"

RUNNER_BIN="$(bazel cquery --output=files //cmd/bb_runner:bb_runner | head -n1)"

sudo install -m0755 "$SCHED_BIN" /usr/local/bin/bb_scheduler

sudo install -m0755 "$WORKER_BIN" /usr/local/bin/bb_worker

sudo install -m0755 "$RUNNER_BIN" /usr/local/bin/bb_runner

Build bb-storage (provides storage and also the frontend when run with a different config)

cd ~/src/bb-storage

bazel build //cmd/bb_storage:bb_storage

STORAGE_BIN="$(bazel cquery --output=files //cmd/bb_storage:bb_storage | head -n1)"

sudo install -m0755 "$STORAGE_BIN" /usr/local/bin/bb_storage

Build bb-browser (optional UI)

cd ~/src/bb-browser

bazel build //cmd/bb_browser:bb_browser

BROWSER_BIN="$(bazel cquery --output=files //cmd/bb_browser:bb_browser | head -n1)"

sudo install -m0755 "$BROWSER_BIN" /usr/local/bin/bb_browser

Sanity check:

for b in bb_storage bb_scheduler bb_worker bb_runner bb_browser; do command -v $b && $b --help >/dev/null 2>&1 && echo "OK: $b"; done

- Buildbarn user, directories & AOSP-sized storage

Both hosts (common)

sudo useradd -r -m -d /var/lib/buildbarn -s /usr/sbin/nologin buildbarn || true

sudo mkdir -p /etc/buildbarn \
  /var/lib/buildbarn/{worker/{build,cache},logs}

sudo chown -R buildbarn:buildbarn /etc/buildbarn /var/lib/buildbarn

rbe-a only (CAS/AC live here)

sudo mkdir -p \
  /var/lib/buildbarn/storage-cas/persistent_state \
  /var/lib/buildbarn/storage-ac/persistent_state

sudo chown -R buildbarn:buildbarn /var/lib/buildbarn

- Jsonnet configs

These are fixed for bare metal, your hostnames, and an empty instance name.
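Because the hostnames are hard-coded in the files that follow, adapting them to another domain comes down to a search-and-replace. The sketch below assumes GNU sed (as shipped on the apt-based hosts used here) and plain domain names without "/" or "&"; the function name rehost_configs and the target domain example.internal are made up for illustration.

```shell
# Replace one domain with another in every Jsonnet file under a directory.
# Assumes GNU sed (-i) and domains made of letters, digits, dots and dashes.
rehost_configs() {
  rc_dir=$1
  rc_old=$2
  rc_new=$3
  # Escape dots so they match literally in the sed pattern.
  rc_old_re=$(printf '%s\n' "$rc_old" | sed 's/\./\\./g')
  for rc_file in "$rc_dir"/*.jsonnet "$rc_dir"/*.libsonnet; do
    [ -f "$rc_file" ] || continue
    sed -i "s/$rc_old_re/$rc_new/g" "$rc_file"
  done
}

# Demo on a scratch directory rather than /etc/buildbarn.
demo_dir=$(mktemp -d)
printf "scheduler: { address: 'rbe-a.maksonlee.com:8983' },\n" > "$demo_dir/worker.jsonnet"
rehost_configs "$demo_dir" maksonlee.com example.internal
cat "$demo_dir/worker.jsonnet"
rm -rf "$demo_dir"
```

Running it against a copy of /etc/buildbarn first, and diffing the result, is a safer habit than editing the live directory in place.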

/etc/buildbarn/common.libsonnet (both hosts)

{
  globalWithDiagnosticsHttpServer(listenAddress): {
    diagnosticsHttpServer: {
      httpServers: [{ listenAddresses: [listenAddress], authenticationPolicy: { allow: {} } }],
      enablePrometheus: true, enablePprof: true, enableActiveSpans: true,
    },
  },
  blobstore: {
    contentAddressableStorage: { grpc: { address: 'rbe-a.maksonlee.com:8981' } },
    actionCache: {
      completenessChecking: {
        backend: { grpc: { address: 'rbe-a.maksonlee.com:8981' } },
        maximumTotalTreeSizeBytes: 64 * 1024 * 1024,
      },
    },
  },
  browserUrl: 'http://rbe-a.maksonlee.com:7984',
  maximumMessageSizeBytes: 2 * 1024 * 1024,
}

/etc/buildbarn/storage.jsonnet (rbe-a)

local common = import 'common.libsonnet';
{
  grpcServers: [{ listenAddresses: [':8981'], authenticationPolicy: { allow: {} } }],
  maximumMessageSizeBytes: common.maximumMessageSizeBytes,
  global: common.globalWithDiagnosticsHttpServer(':9981'),
  contentAddressableStorage: {
    backend: {
      'local': {
        keyLocationMapOnBlockDevice: { file: { path: '/var/lib/buildbarn/storage-cas/key_location_map', sizeBytes: 400 * 1024 * 1024 } },
        keyLocationMapMaximumGetAttempts: 16,
        keyLocationMapMaximumPutAttempts: 64,
        oldBlocks: 8, currentBlocks: 24, newBlocks: 3,
        blocksOnBlockDevice: {
          source: { file: { path: '/var/lib/buildbarn/storage-cas/blocks', sizeBytes: 700 * 1024 * 1024 * 1024 } },
          spareBlocks: 3,
        },
        persistent: { stateDirectoryPath: '/var/lib/buildbarn/storage-cas/persistent_state', minimumEpochInterval: '300s' },
      },
    },
    getAuthorizer: { allow: {} }, putAuthorizer: { allow: {} }, findMissingAuthorizer: { allow: {} },
  },
  actionCache: {
    backend: {
      'local': {
        keyLocationMapOnBlockDevice: { file: { path: '/var/lib/buildbarn/storage-ac/key_location_map', sizeBytes: 128 * 1024 * 1024 } },
        keyLocationMapMaximumGetAttempts: 16,
        keyLocationMapMaximumPutAttempts: 64,
        oldBlocks: 8, currentBlocks: 24, newBlocks: 1,
        blocksOnBlockDevice: {
          source: { file: { path: '/var/lib/buildbarn/storage-ac/blocks', sizeBytes: 8 * 1024 * 1024 * 1024 } },
          spareBlocks: 3,
        },
        persistent: { stateDirectoryPath: '/var/lib/buildbarn/storage-ac/persistent_state', minimumEpochInterval: '300s' },
      },
    },
    getAuthorizer: { allow: {} }, putAuthorizer: { allow: {} },
  },
}

/etc/buildbarn/frontend.jsonnet (rbe-a)

local common = import 'common.libsonnet';
{
  grpcServers: [{ listenAddresses: [':8980'], authenticationPolicy: { allow: {} } }],
  schedulers: {
    '': { // empty instance name
      endpoint: {
        address: 'rbe-a.maksonlee.com:8982',
        addMetadataJmespathExpression: {
          expression: |||
            {
              "build.bazel.remote.execution.v2.requestmetadata-bin":
                incomingGRPCMetadata."build.bazel.remote.execution.v2.requestmetadata-bin"
            }
          |||,
        },
      },
    },
  },
  maximumMessageSizeBytes: common.maximumMessageSizeBytes,
  global: common.globalWithDiagnosticsHttpServer(':9980'),
  contentAddressableStorage: {
    backend: common.blobstore.contentAddressableStorage,
    getAuthorizer: { allow: {} }, putAuthorizer: { allow: {} }, findMissingAuthorizer: { allow: {} },
  },
  actionCache: {
    backend: common.blobstore.actionCache,
    getAuthorizer: { allow: {} }, putAuthorizer: { allow: {} },
  },
  executeAuthorizer: { allow: {} },
}

/etc/buildbarn/scheduler.jsonnet (rbe-a)

About platform matching (single runner for all “platforms”)

We intentionally ignore client-provided platform properties so every request lands in the same platform queue and uses the same runner, because this cluster only builds AOSP.

local common = import 'common.libsonnet';
{
  adminHttpServers: [{ listenAddresses: [':7982'], authenticationPolicy: { allow: {} } }],
  clientGrpcServers: [{ listenAddresses: [':8982'], authenticationPolicy: { allow: {} } }],
  workerGrpcServers: [{ listenAddresses: [':8983'], authenticationPolicy: { allow: {} } }],
  browserUrl: common.browserUrl,
  contentAddressableStorage: common.blobstore.contentAddressableStorage,
  maximumMessageSizeBytes: common.maximumMessageSizeBytes,
  global: common.globalWithDiagnosticsHttpServer(':9982'),
  executeAuthorizer: { allow: {} },
  modifyDrainsAuthorizer: { allow: {} },
  killOperationsAuthorizer: { allow: {} },
  synchronizeAuthorizer: { allow: {} },
  actionRouter: {
    simple: {
      platformKeyExtractor: { static: { properties: [] } },
      invocationKeyExtractors: [{ correlatedInvocationsId: {} }, { toolInvocationId: {} }],
      initialSizeClassAnalyzer: {
        defaultExecutionTimeout: '1800s',
        maximumExecutionTimeout: '7200s',
      },
    },
  },
  platformQueueWithNoWorkersTimeout: '900s',
}

/etc/buildbarn/browser.jsonnet (rbe-a)

local common = import 'common.libsonnet';
{
  blobstore: common.blobstore,
  maximumMessageSizeBytes: common.maximumMessageSizeBytes,
  httpServers: [{ listenAddresses: [':7984'], authenticationPolicy: { allow: {} } }],
  global: common.globalWithDiagnosticsHttpServer(':9984'),
  authorizer: { allow: {} },
  requestMetadataLinksJmespathExpression: { expression: '`{}`' },
}

/etc/buildbarn/runner.jsonnet (both hosts)

local common = import 'common.libsonnet';
{
  buildDirectoryPath: '/var/lib/buildbarn/worker/build',
  global: common.globalWithDiagnosticsHttpServer(':9987'),
  grpcServers: [{ listenPaths: ['/var/lib/buildbarn/worker/runner.sock'], authenticationPolicy: { allow: {} } }],
}

/etc/buildbarn/worker.jsonnet (both hosts)

local common = import 'common.libsonnet';
{
  blobstore: common.blobstore,
  browserUrl: common.browserUrl,
  maximumMessageSizeBytes: common.maximumMessageSizeBytes,
  scheduler: { address: 'rbe-a.maksonlee.com:8983' },
  global: common.globalWithDiagnosticsHttpServer(':9986'),
  buildDirectories: [
    {
      native: {
        buildDirectoryPath: '/var/lib/buildbarn/worker/build',
        cacheDirectoryPath: '/var/lib/buildbarn/worker/cache',
        maximumCacheFileCount: 10000,
        maximumCacheSizeBytes: 4 * 1024 * 1024 * 1024,
        cacheReplacementPolicy: 'LEAST_RECENTLY_USED',
      },
      runners: [
        {
          endpoint: { address: 'unix:/var/lib/buildbarn/worker/runner.sock' },
          concurrency: 4,
          maximumFilePoolFileCount: 10000,
          maximumFilePoolSizeBytes: 32 * 1024 * 1024 * 1024,
          platform: {},
          // Placeholder: set this to the host's own name on each machine
          // (rbe-a.maksonlee.com or rbe-b.maksonlee.com) so worker IDs stay distinct.
          workerId: { hostname: 'rbe-(a|b).maksonlee.com' },
        }
      ],
    },
  ],
  filePool: {
    blockDevice: { file: { path: '/var/lib/buildbarn/worker/filepool', sizeBytes: 32 * 1024 * 1024 * 1024 } }
  },
  inputDownloadConcurrency: 10,
  outputUploadConcurrency: 11,
  directoryCache: { maximumCount: 1000, maximumSizeBytes: 1000 * 1024, cacheReplacementPolicy: 'LEAST_RECENTLY_USED' },
}

- systemd units

/etc/systemd/system/bb-storage.service (rbe-a)

[Unit]

Description=Buildbarn Storage (CAS/AC)

After=network-online.target

[Service]

User=buildbarn

WorkingDirectory=/var/lib/buildbarn

ExecStart=/usr/local/bin/bb_storage /etc/buildbarn/storage.jsonnet

Restart=on-failure

[Install]

WantedBy=multi-user.target

/etc/systemd/system/bb-frontend.service (rbe-a)

[Unit]

Description=Buildbarn Frontend (REAPI)

After=bb-storage.service bb-scheduler.service

[Service]

User=buildbarn

WorkingDirectory=/var/lib/buildbarn

ExecStart=/usr/local/bin/bb_storage /etc/buildbarn/frontend.jsonnet

Restart=on-failure

[Install]

WantedBy=multi-user.target

/etc/systemd/system/bb-scheduler.service (rbe-a)

[Unit]

Description=Buildbarn Scheduler

After=bb-storage.service

[Service]

User=buildbarn

WorkingDirectory=/var/lib/buildbarn

ExecStart=/usr/local/bin/bb_scheduler /etc/buildbarn/scheduler.jsonnet

Restart=on-failure

[Install]

WantedBy=multi-user.target

/etc/systemd/system/bb-browser.service (rbe-a)

[Unit]

Description=Buildbarn Browser

After=bb-storage.service

[Service]

User=buildbarn

WorkingDirectory=/var/lib/buildbarn

ExecStart=/usr/local/bin/bb_browser /etc/buildbarn/browser.jsonnet

Restart=on-failure

[Install]

WantedBy=multi-user.target

/etc/systemd/system/bb-runner.service (both hosts)

[Unit]

Description=Buildbarn Runner

After=network-online.target

[Service]

User=buildbarn

WorkingDirectory=/var/lib/buildbarn

ExecStart=/usr/local/bin/bb_runner /etc/buildbarn/runner.jsonnet

Restart=on-failure

[Install]

WantedBy=multi-user.target

/etc/systemd/system/bb-worker.service (both hosts)

[Unit]

Description=Buildbarn Worker

After=bb-scheduler.service bb-runner.service

[Service]

User=buildbarn

WorkingDirectory=/var/lib/buildbarn

ExecStart=/usr/local/bin/bb_worker /etc/buildbarn/worker.jsonnet

Restart=on-failure

[Install]

WantedBy=multi-user.target

Enable/start:

# rbe-a

sudo systemctl daemon-reload

sudo systemctl enable --now bb-storage bb-scheduler bb-frontend bb-browser bb-runner bb-worker

sudo systemctl --no-pager --full status bb-storage bb-scheduler bb-frontend bb-browser bb-runner bb-worker

# rbe-b

sudo systemctl daemon-reload

sudo systemctl enable --now bb-runner bb-worker

sudo systemctl --no-pager --full status bb-runner bb-worker

- Quick verify

# Frontend (REAPI) should list services

grpcurl -plaintext rbe-a.maksonlee.com:8980 list | head

# Scheduler admin page returns HTML (expect HTTP/1.1 200 OK)

curl -fsSI http://rbe-a.maksonlee.com:7982/ | head -n 5

# Browser UI headers without launching a browser

curl -fsSI http://rbe-a.maksonlee.com:7984/ | head -n 5

# Storage gRPC port is listening (expect LISTEN on :8981)

ss -ltnp | awk '$4 ~ /:8981$/'

# Prometheus metrics (quiet)

curl -fs http://rbe-a.maksonlee.com:9980/metrics | head -n 3

curl -fs http://rbe-a.maksonlee.com:9982/metrics | head -n 3

curl -fs http://rbe-a.maksonlee.com:9984/metrics | head -n 3

Optional: capability check for the empty instance name

grpcurl -plaintext -d '{"instanceName":""}' \
  rbe-a.maksonlee.com:8980 \
  build.bazel.remote.execution.v2.Capabilities/GetCapabilities

- Build AOSP

First, set the environment variables (note the empty instance name):

# Enable RBE usage in the build

export USE_RBE=1

# Do not send RPC credentials to the RBE server (this setup accepts unauthenticated clients)

export RBE_use_rpc_credentials=false

# The endpoint of the RBE server (Buildbarn Frontend)

export RBE_service=rbe-a.maksonlee.com:8980

# Use the same frontend for Remote Cache

export RBE_remote_cache=rbe-a.maksonlee.com:8980

# Instance name = empty string (or unset this variable entirely)

export RBE_instance=

# No auth / no TLS to the RBE service

export RBE_service_no_auth=true

export RBE_service_no_security=true

# Parallelism for Ninja remote actions (tune to RBE CPU cores)

export NINJA_REMOTE_NUM_JOBS=24

# Enable RBE for toolchains/features

export RBE_ABI_DUMPER=1

export RBE_ABI_LINKER=1

export RBE_CLANG_TIDY=1

export RBE_CXX_LINKS=1

export RBE_D8=1

export RBE_JAVAC=1

export RBE_METALAVA=1

export RBE_R8=1

export RBE_SIGNAPK=1

export RBE_TURBINE=1

export RBE_ZIP=1

# Execution strategies (remote with local fallback)

export RBE_ABI_DUMPER_EXEC_STRATEGY=remote_local_fallback

export RBE_ABI_LINKER_EXEC_STRATEGY=remote_local_fallback

export RBE_CLANG_TIDY_EXEC_STRATEGY=remote_local_fallback

export RBE_CXX_EXEC_STRATEGY=remote_local_fallback

export RBE_CXX_LINKS_EXEC_STRATEGY=remote_local_fallback

export RBE_D8_EXEC_STRATEGY=remote_local_fallback

export RBE_JAR_EXEC_STRATEGY=remote_local_fallback

export RBE_JAVAC_EXEC_STRATEGY=remote_local_fallback

export RBE_METALAVA_EXEC_STRATEGY=remote_local_fallback

export RBE_R8_EXEC_STRATEGY=remote_local_fallback

export RBE_SIGNAPK_EXEC_STRATEGY=remote_local_fallback

export RBE_TURBINE_EXEC_STRATEGY=remote_local_fallback

export RBE_ZIP_EXEC_STRATEGY=remote_local_fallback

export RBE_proxy_log_dir=/tmp/reproxy

First build:

mkdir ~/aosp-15 && cd ~/aosp-15

repo init -u https://android.googlesource.com/platform/manifest -b android-15.0.0_r30

repo sync

source build/envsetup.sh

lunch aosp_arm64-trunk_staging-userdebug

# Kick off the build:

m -j24

Sample tail:

RBE Stats: down 29.09 GB, up 46.87 GB, 94384 remote executions, 113 local fallbacks

#### build completed successfully (03:42:10 (hh:mm:ss)) ####

Disk usage example:

cd /var/lib/buildbarn

du -h --max-depth=1

71G ./storage-cas

4.0K ./logs

1.4G ./worker

210M ./storage-ac

72G .

Second build:

m clean

m -j24

Sample tail:

RBE Stats: down 58.17 GB, up 46.87 GB, 94354 cache hits, 94414 remote executions, 226 local fallbacks

#### build completed successfully (01:42:20 (hh:mm:ss)) ####

Results & Sizing Notes

- Remote exec volume: 94384 remote actions with only 113 local fallbacks, so scheduling and platform matching are healthy.

- Bandwidth: 29.09 GB down / 46.87 GB up is typical for a first clean build; uploads drop on incremental builds as CAS hits rise.

- Disk usage (actual, not sparse):

  - storage-cas ~ 71 G after the first clean build (normal for AOSP)

  - storage-ac ~ 210 M (AC remains small)

  - worker ~ 1.4 G
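The figures above can be turned into a few ratios with plain shell and awk. This is a rough sketch using only numbers quoted in this guide; the helper names hms_to_seconds and percent are hypothetical, and the CAS ratio compares du's 71G with the 700 GiB blocks file, so treat it as approximate.

```shell
# Convert hh:mm:ss to seconds (awk reads "03" as 3, so no octal surprises).
hms_to_seconds() {
  echo "$1" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

# Percentage of part in whole, rounded to two decimals.
percent() {
  awk -v part="$1" -v whole="$2" 'BEGIN { printf "%.2f\n", 100 * part / whole }'
}

first=$(hms_to_seconds 03:42:10)    # first clean build
second=$(hms_to_seconds 01:42:20)   # second build after "m clean"

echo "local fallback rate: $(percent 113 94384)%"
echo "CAS blocks file used: $(percent 71 700)%"
echo "speedup: $(awk -v a="$first" -v b="$second" 'BEGIN { printf "%.2f\n", a / b }')x"
```

With these inputs the fallback rate comes out at about 0.12%, the CAS file is roughly 10% full after one clean build, and the second build is a little over twice as fast as the first.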

Notes & tips

- Instance name: This guide uses the empty instance name (""). Use the same name in frontend.jsonnet (the schedulers key is "") and set RBE_instance= (or simply unset the variable).

- Storage sizing: If you change CAS/AC file sizes, keep any pre-allocation (fallocate) and sizeBytes in sync.

- Platforms: This cluster intentionally ignores client-provided platform properties. The scheduler uses platformKeyExtractor: { static: { properties: [] } }, so every action lands in the same queue and uses the same runner. The worker advertises a single runner with platform: {}.

- Security: All authorizers are allow: {} here for simplicity. Lock down before exposing beyond a trusted network.
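For the storage sizing note, one way to confirm that a pre-allocated file still matches its sizeBytes is to evaluate the Jsonnet size expression in the shell and compare it with the file's apparent size. This is a minimal sketch, assuming GNU stat (stat -c %s); the helper names size_bytes and size_in_sync are invented for this example.

```shell
# Evaluate a sizeBytes expression copied from a Jsonnet config,
# e.g. "700 * 1024 * 1024 * 1024", using shell integer arithmetic.
size_bytes() {
  echo $(( $1 ))
}

# Succeed only if the file's apparent size equals the expression.
size_in_sync() {
  sis_actual=$(stat -c %s "$1") || return 1
  [ "$sis_actual" -eq "$(size_bytes "$2")" ]
}

# CAS blocks size from storage.jsonnet, in bytes.
size_bytes "700 * 1024 * 1024 * 1024"

# Usage on rbe-a (path and size taken from storage.jsonnet):
# size_in_sync /var/lib/buildbarn/storage-cas/blocks "700 * 1024 * 1024 * 1024" \
#   || echo "CAS blocks file and sizeBytes differ"
```

The comparison uses the apparent size rather than allocated blocks, so it gives the same answer whether the file is sparse or was fully pre-allocated.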
