When deploying Ceph RGW Multisite, it’s common to span zones across different regions—Asia, Europe, America—connected by wide-area networks. These connections often introduce latency, bandwidth limits, and packet loss.

To emulate this in a lab or VM environment, we can use Linux’s tc tool to simulate:

- Geographically realistic latency (e.g., 200ms)

- Bandwidth caps on inter-zone replication

- Selective shaping only between specific IPs

This post walks you through simulating a slow link between two Ceph RGW zones using tc with an htb qdisc, netem, and a u32 filter.

Environment Setup

| Host | IP Address | Interface |
|---|---|---|
| ceph-zone1 | 192.168.0.82 | ens32 |
| ceph-zone2 | 192.168.0.83 | ens32 |

We’ll simulate network delay and bandwidth limit only for traffic between ceph-zone1 and ceph-zone2.

Objective: Simulate a Geo-Distributed Link

| Simulated Condition | Real-World Analogy |
|---|---|
| 200ms latency | Asia ↔ Europe RTT |
| 5 Mbit/s bandwidth cap | Cross-regional backbone |
| Directional shaping | From zone1 to zone2 only |
| Others unaffected | Dashboard, SSH, etc. remain fast |

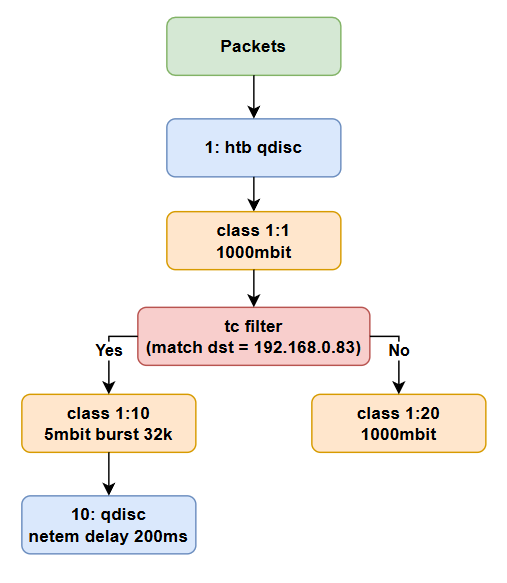

Packet Flow Diagram

This flowchart illustrates the shaping logic:

- Add Root HTB Qdisc and Classes

# Root HTB with default full-speed class
sudo tc qdisc add dev ens32 root handle 1: htb default 20

# Parent class: full link capacity
sudo tc class add dev ens32 parent 1: classid 1:1 htb rate 1000mbit

# Slow class for zone2
sudo tc class add dev ens32 parent 1:1 classid 1:10 htb rate 5mbit burst 32k

# Default class for all other traffic
sudo tc class add dev ens32 parent 1:1 classid 1:20 htb rate 1000mbit

- Add Latency with netem

sudo tc qdisc add dev ens32 parent 1:10 handle 10: netem delay 200ms

This adds a 200ms delay to every packet that leaves through the 1:10 class. Because only this direction is shaped, the round-trip time to ceph-zone2 rises by about 200ms.

- Match Only Inter-Zone Traffic

sudo tc filter add dev ens32 protocol ip parent 1:0 prio 1 u32 \
  match ip dst 192.168.0.83/32 flowid 1:10

Only traffic from ceph-zone1 to ceph-zone2 (192.168.0.83) is affected. All other traffic falls into the default class 1:20 and remains fast.
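The three steps above can be collected into one small script. This is a minimal sketch rather than part of the original setup: the names `shape_link`, `run`, and `DRY_RUN` are assumptions introduced for illustration. By default it only prints the tc commands so they can be reviewed before anything is applied.

```shell
#!/bin/sh
# Sketch: print (or apply) the htb + netem + u32 setup from the steps above.
# shape_link, run, and DRY_RUN are illustrative names, not part of tc.
set -eu

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# shape_link IFACE PEER_IP RATE DELAY
shape_link() {
  iface="$1"; peer="$2"; rate="$3"; delay="$4"
  # Root qdisc; unclassified traffic goes to the full-speed class 1:20
  run sudo tc qdisc add dev "$iface" root handle 1: htb default 20
  run sudo tc class add dev "$iface" parent 1: classid 1:1 htb rate 1000mbit
  # Slow class for the peer zone, plus the default class
  run sudo tc class add dev "$iface" parent 1:1 classid 1:10 htb rate "$rate" burst 32k
  run sudo tc class add dev "$iface" parent 1:1 classid 1:20 htb rate 1000mbit
  # Delay is attached to the slow class only
  run sudo tc qdisc add dev "$iface" parent 1:10 handle 10: netem delay "$delay"
  # Steer packets destined for the peer into the slow class
  run sudo tc filter add dev "$iface" protocol ip parent 1:0 prio 1 u32 \
    match ip dst "$peer/32" flowid 1:10
}

# On ceph-zone1, shaping traffic towards ceph-zone2:
shape_link ens32 192.168.0.83 5mbit 200ms
```

Running it with `DRY_RUN=0` executes the commands instead of printing them. Once applied, `tc -s class show dev ens32` lists per-class byte and packet counters, which is a quick way to see whether inter-zone traffic is really landing in class 1:10.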

Optional: Set Up Reverse Direction (Zone2 → Zone1)

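Repeat all steps on ceph-zone2, but change the match ip dst to 192.168.0.82 so that shaping applies in the other direction. Keep in mind that netem delays each direction separately: with 200ms configured on both hosts, the RTT rises by roughly 400ms, so use delay 100ms on each side if the goal is a 200ms RTT.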

Test the Simulation

Install iperf3 on both nodes, then start a server on ceph-zone2 and run the client from ceph-zone1:

# On ceph-zone2
iperf3 -s

# On ceph-zone1
iperf3 -c 192.168.0.83

Before tc Shaping

Connecting to host 192.168.0.83, port 5201

[ 5] local 192.168.0.82 port 34578 connected to 192.168.0.83 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 46.6 MBytes 391 Mbits/sec 193 82.0 KBytes

[ 5] 1.00-2.00 sec 50.6 MBytes 425 Mbits/sec 139 137 KBytes

[ 5] 2.00-3.00 sec 48.0 MBytes 403 Mbits/sec 200 134 KBytes

[ 5] 3.00-4.00 sec 51.6 MBytes 433 Mbits/sec 167 105 KBytes

[ 5] 4.00-5.00 sec 48.0 MBytes 403 Mbits/sec 181 107 KBytes

[ 5] 5.00-6.00 sec 43.1 MBytes 362 Mbits/sec 263 62.2 KBytes

[ 5] 6.00-7.00 sec 44.6 MBytes 374 Mbits/sec 198 129 KBytes

[ 5] 7.00-8.00 sec 49.2 MBytes 413 Mbits/sec 234 70.7 KBytes

[ 5] 8.00-9.00 sec 53.2 MBytes 447 Mbits/sec 137 90.5 KBytes

[ 5] 9.00-10.00 sec 55.2 MBytes 463 Mbits/sec 153 158 KBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 490 MBytes 411 Mbits/sec 1865 sender

[ 5] 0.00-10.00 sec 489 MBytes 410 Mbits/sec receiver

iperf Done.

After tc Shaping

Connecting to host 192.168.0.83, port 5201

[ 5] local 192.168.0.82 port 50152 connected to 192.168.0.83 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 1.12 MBytes 9.43 Mbits/sec 0 226 KBytes

[ 5] 1.00-2.00 sec 1.38 MBytes 11.5 Mbits/sec 52 342 KBytes

[ 5] 2.00-3.00 sec 1.00 MBytes 8.39 Mbits/sec 36 334 KBytes

[ 5] 3.00-4.00 sec 896 KBytes 7.31 Mbits/sec 0 375 KBytes

[ 5] 4.00-5.00 sec 0.00 Bytes 0.00 bits/sec 21 264 KBytes

[ 5] 5.00-6.00 sec 896 KBytes 7.34 Mbits/sec 0 284 KBytes

[ 5] 6.00-7.00 sec 0.00 Bytes 0.00 bits/sec 0 304 KBytes

[ 5] 7.00-8.01 sec 1.00 MBytes 8.31 Mbits/sec 0 315 KBytes

[ 5] 8.01-9.00 sec 896 KBytes 7.44 Mbits/sec 0 320 KBytes

[ 5] 9.00-10.01 sec 0.00 Bytes 0.00 bits/sec 0 321 KBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.01 sec 7.12 MBytes 5.97 Mbits/sec 109 sender

[ 5] 0.00-10.55 sec 5.62 MBytes 4.47 Mbits/sec receiver

iperf Done.

| Metric | Value | Interpretation |
|---|---|---|
| Avg bitrate (sender) | ~5.97 Mbit/s | Close to the 5 Mbit/s htb cap; the sender figure likely includes data still buffered when the test ended, and the receiver measured 4.47 Mbit/s |
| Latency impact | Intermittent 0 throughput | Due to buffering/queue limit |
| Retransmissions | 109 | Acceptable in a WAN-like setup |
| TCP Cwnd fluctuations | 226 KB → 321 KB | Normal TCP behavior under simulated delay |
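The Cwnd values can be put in context with the bandwidth-delay product (BDP), the amount of data the link can hold in flight at once. The sketch below computes it for the configured 5 Mbit/s cap and 200ms delay. `bdp_bytes` is a hypothetical helper name, and the lab network's own latency is assumed to be negligible.

```shell
#!/bin/sh
# Sketch: bandwidth-delay product for the simulated link.
# bdp_bytes is an illustrative helper name.

# bdp_bytes RATE_MBIT RTT_MS -> bytes that fit "in flight" on the link
bdp_bytes() {
  rate_mbit="$1"
  rtt_ms="$2"
  # Mbit/s * 1000000 = bit/s; * ms / 1000 = bits per RTT; / 8 = bytes
  echo $(( rate_mbit * 1000000 * rtt_ms / 1000 / 8 ))
}

bdp_bytes 5 200   # 5 Mbit/s cap, 200 ms added delay
```

This prints 125000, i.e. about 122 KiB. The congestion windows in the shaped run (226 to 375 KBytes) are larger than that, which is consistent with data queuing up in the slow class and with the retransmissions seen in the output.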

To Remove the Shaping

sudo tc qdisc del dev ens32 root

This deletes the root qdisc together with all classes and filters attached to it, so the interface returns to its default qdisc.
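To confirm the reset, the active qdisc can be listed right after the delete. The following is a minimal sketch assuming the same interface, ens32. `reset_shaping` and `DRY_RUN` are hypothetical names used only in this example, and by default the script just prints what it would run.

```shell
#!/bin/sh
# Sketch: remove shaping and show what remains on the interface.
# reset_shaping and DRY_RUN are illustrative names, not part of tc.

DRY_RUN="${DRY_RUN:-1}"   # 1 = print only; set to 0 to execute

reset_shaping() {
  iface="$1"
  if [ "$DRY_RUN" = "1" ]; then
    echo "sudo tc qdisc del dev $iface root"
    echo "tc qdisc show dev $iface"
    return 0
  fi
  # The delete fails when no custom root qdisc exists, so tolerate that case
  sudo tc qdisc del dev "$iface" root 2>/dev/null || true
  # Only the system default qdisc should be listed now
  tc qdisc show dev "$iface"
}

reset_shaping ens32
```

With `DRY_RUN=0`, the listing should no longer show the htb or netem entries.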

Summary

| Component | Description |
|---|---|
| htb | Bandwidth shaping with class isolation |
| class 1:10 | Target class for slow traffic |
| netem | Adds simulated delay |
| u32 filter | Targets only inter-zone traffic |
